openpilot 0.10

What comes after 0.9? After three years on the 0.9 series, we welcome you to the 0.10 series.

openpilot 0.10 is defined by its entirely new training architecture. While it’s a new stack, we focused on preserving the reliability of the previous generation models, so this release should feel familiar. In the upcoming releases, we’ll work towards openpilot 1.0, where end-to-end longitudinal control graduates from Experimental to Chill mode.

New Driving Model

This release’s model includes three major changes in training:

- A completely new end-to-end training architecture and supervision using our new World Model: Tomb Raider (#35620)

- Improved Lead Car detection: Space Lab (#35816)

- Use of lossy image compression during training in preparation for using images from the new Machine Learning Simulator: Vegan Filet-o-Fish (#35240)

Space Lab 2 🛰️🛰️ (#35816), the final model, includes all three changes and should provide a noticeable performance improvement while still feeling very familiar and reliable.

The new model has noticeably improved reactivity around parked cars.

World Model Planner

In this release we ship a completely new end-to-end architecture, a.k.a. Tomb Raider (#35620). In previous releases, the driving model predicted the path it thought a human would drive in the current scene. This meant predicting a state (position, speed, etc.) different from your current state. It was then up to an MPC system to generate a feasible trajectory to get to that state.

This MPC system required careful tuning to be just the right amount of aggressive. We removed the lateral MPC in a previous release, but at that time we just moved it to the training side, where it still provided the ground-truth actions used to supervise the model.

A simulation rollout showing the human-driven path in blue, the prediction of the human path by the previous release’s model in green, and finally the new model’s prediction in orange. Note that the orange path starts at your current position and shows a clean convergence to the center of the lane.

In this release, the MPC systems are removed entirely, both in training and inference, for lateral (Chill and Experimental mode) and longitudinal (Experimental mode) control. Training a model to predict a trajectory that goes from the current state to the predicted human state is difficult. We do this by supervising the driving model during training with a World Model that has knowledge of the future. This architecture is the subject of our most recent research paper, published at the Computer Vision and Pattern Recognition Conference (CVPR).

The learned World Model has several advantages over the MPC systems it replaces. Most notably we expect a significant improvement in longitudinal control in Experimental mode for this initial release. This change is also a prerequisite for future improvements.

Improved Lead Car detection in stop and go

The Chill mode longitudinal policy, however, still relies on a classical lead policy. Even though we plan to replace it with the end-to-end policy from Experimental mode, we decided to make some small improvements in this release.

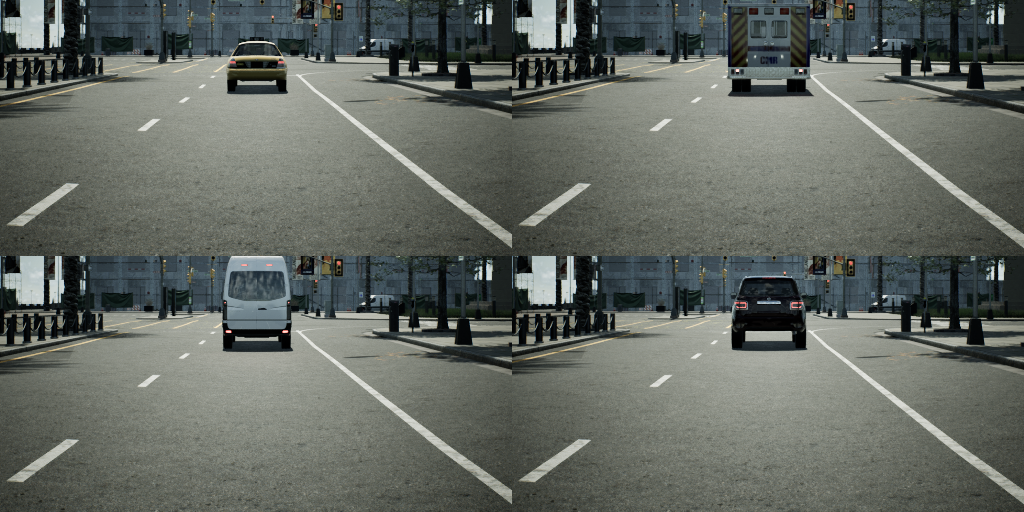

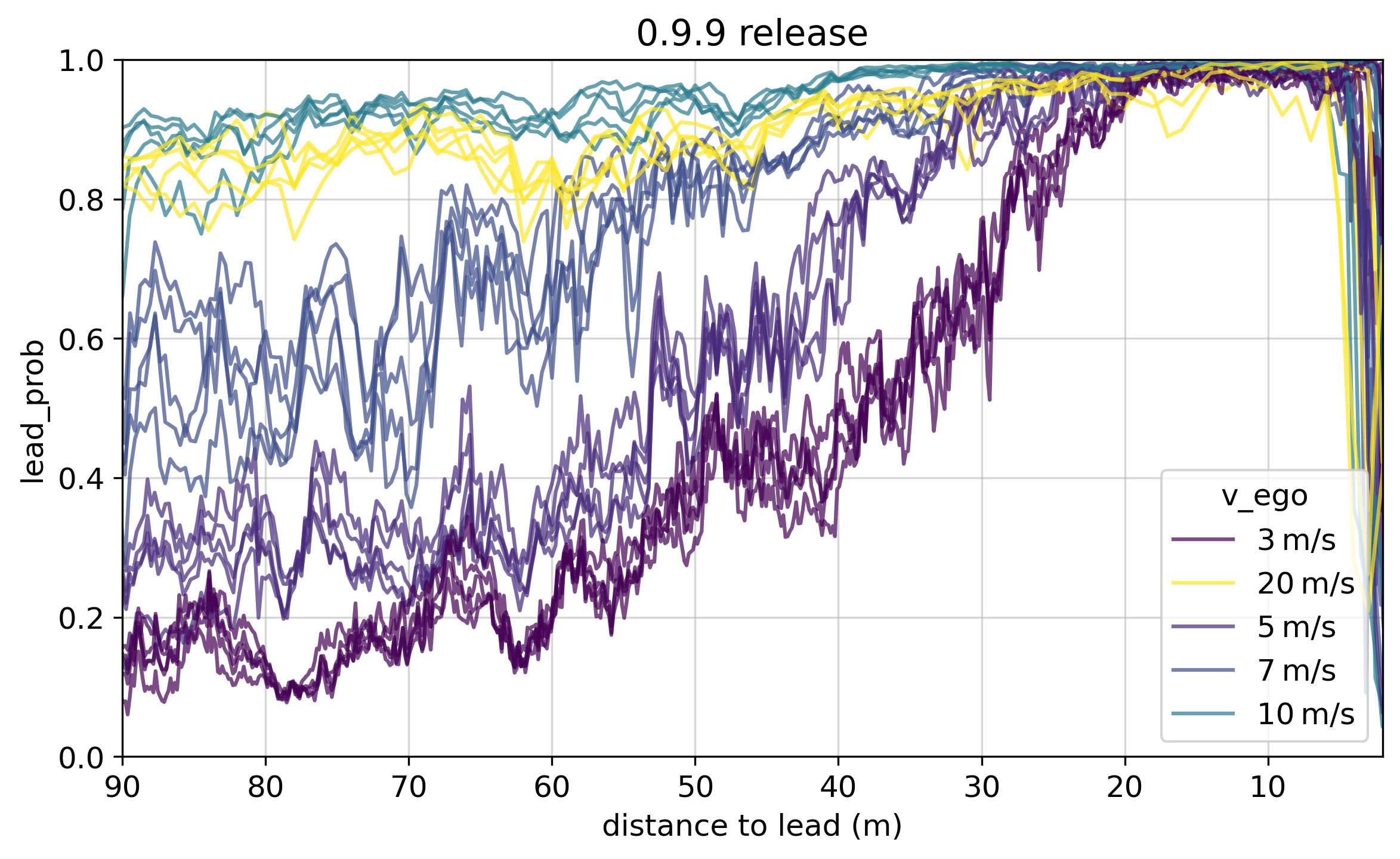

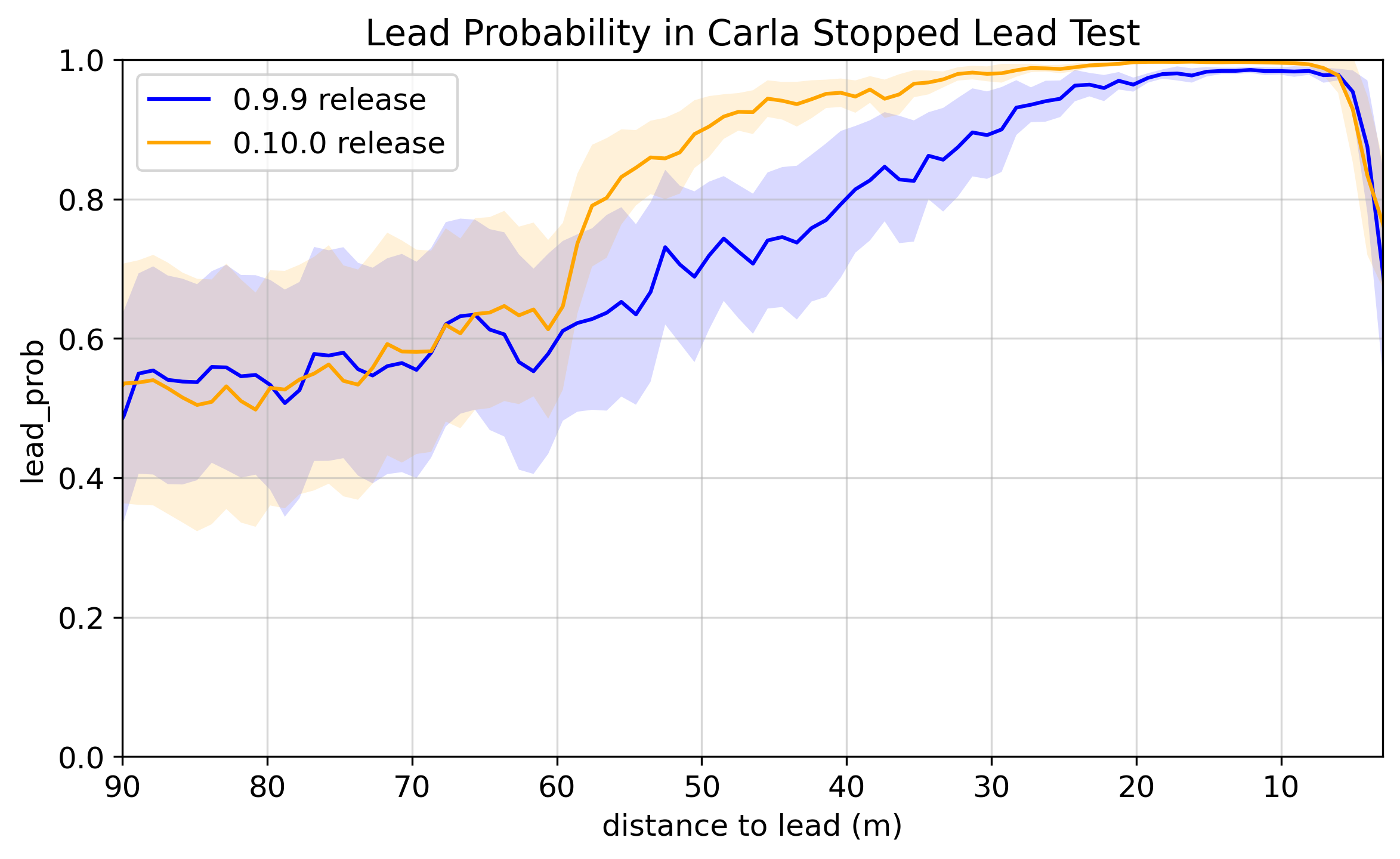

Space Lab (#35816) significantly improves stopped lead car detection, especially when approaching at low speed. We achieve this by adjusting our lead data filtering logic for stop-and-go situations, which decreased the share of low-speed frames ignored for lead output training from 78% to 52%.

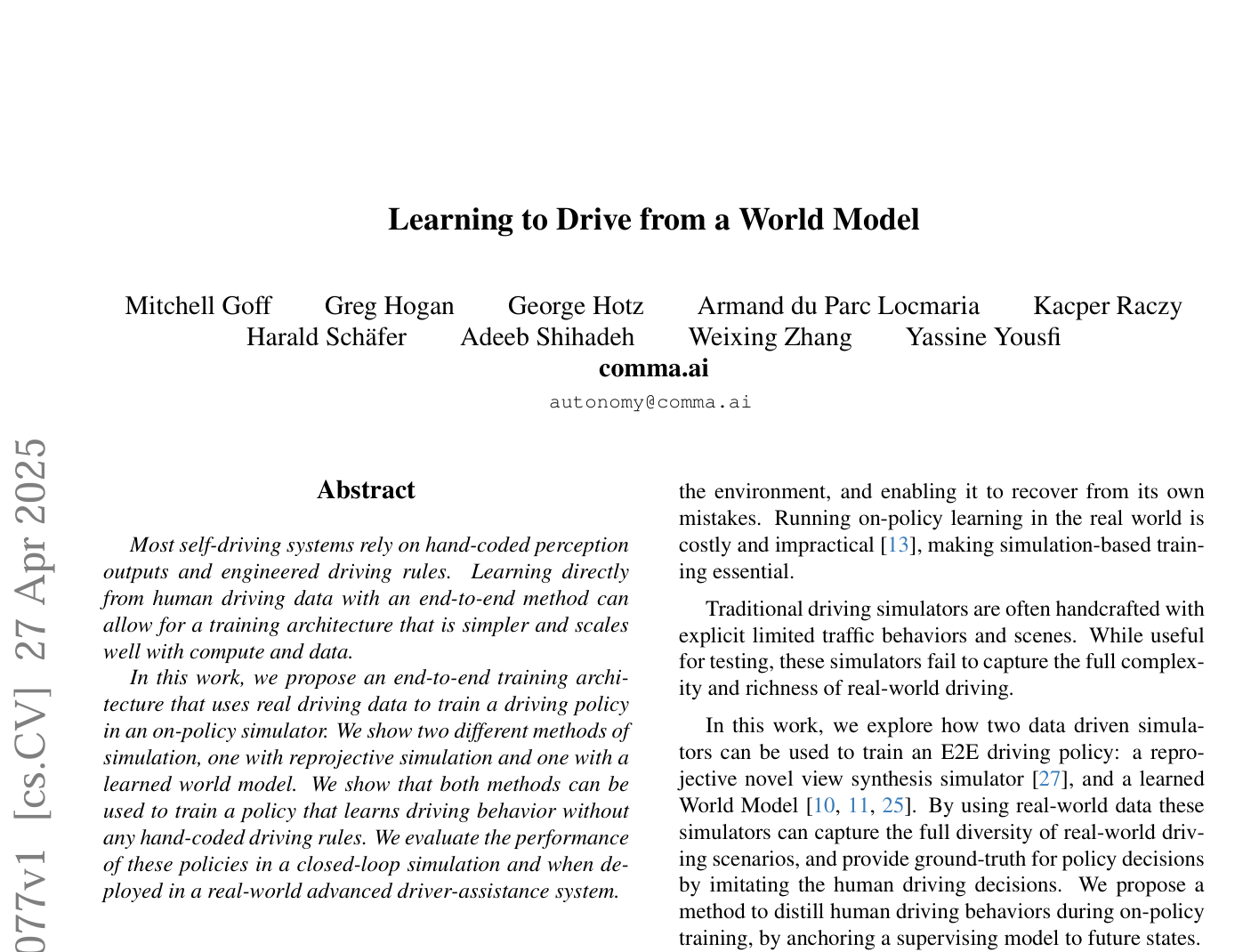

Examples of frames where the lead was previously ignored
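As a quick arithmetic check on the filtering figures above: if 78% of low-speed frames were ignored before and 52% are ignored now, the share of low-speed frames that contribute to lead training roughly doubles. The sketch below only restates those two percentages; the variable names are illustrative.

```python
# Shares of low-speed frames ignored for lead output training (from the text above).
ignored_before = 0.78
ignored_after = 0.52

# The complement is the share of low-speed frames actually used for training.
used_before = 1.0 - ignored_before
used_after = 1.0 - ignored_after

# Relative increase in usable low-speed frames.
increase = used_after / used_before

print(f"used before: {used_before:.0%}, used after: {used_after:.0%}, ratio: {increase:.2f}x")
```

In other words, the usable share goes from about 22% to about 48% of low-speed frames.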

In addition to our real life segments metrics, we tested this change using 25 CARLA simulator scenarios where the ego car approaches a stopped lead car at various speeds.

Examples from the CARLA Stopped Lead Test scenarios

Lead Probability Model outputs for all 25 CARLA Stopped Lead Test scenarios

Average and Spread of Lead Probability Model output across all 25 CARLA Stopped Lead Test scenarios
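For readers who want to produce a similar summary from their own simulation runs, here is a minimal sketch of computing a per-time-step average and spread across scenarios. It assumes "spread" means the population standard deviation, which the post does not specify. The function name and the toy traces are hypothetical and are not real model output.

```python
from statistics import fmean, pstdev

def summarize(traces):
    """Return per-time-step (means, spreads) across scenarios.

    traces: list of equally long lists, one per scenario, each holding the
    lead probability at successive time steps.
    """
    steps = list(zip(*traces))  # regroup values by time step
    means = [fmean(step) for step in steps]
    spreads = [pstdev(step) for step in steps]  # assumption: spread = std dev
    return means, spreads

# Made-up placeholder traces (NOT real model output): three scenarios, four steps.
toy_traces = [
    [0.1, 0.4, 0.8, 1.0],
    [0.0, 0.2, 0.6, 1.0],
    [0.2, 0.6, 1.0, 1.0],
]
means, spreads = summarize(toy_traces)
```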

VAE compression

The ML Simulator is a diffusion model operating in a latent space. Pairs of wide and narrow road-facing images are encoded as 32x16x32 tensors using a custom Encoder/Decoder Neural Network (VAE). Although this encoding is lossy, it provides a compact representation of driving scenes, which is essential to training good and efficient generative models.
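To give a feel for how compact this representation is, the sketch below counts the values in a 32x16x32 latent and compares that against a pair of input images. The input resolution is not stated in this post, so the `(3, 256, 512)` shape is purely a hypothetical placeholder, as is the assumption that one latent tensor encodes one image pair.

```python
from math import prod

LATENT_SHAPE = (32, 16, 32)  # from the text above

def compression_ratio(input_shape, n_images=2, latent_shape=LATENT_SHAPE):
    """Ratio of input values to latent values for one encoded image pair."""
    return n_images * prod(input_shape) / prod(latent_shape)

# Number of values in one latent tensor.
latent_values = prod(LATENT_SHAPE)

# HYPOTHETICAL input: two 3-channel 256x512 images. The real resolution is
# not given in this post; substitute the actual shape to get a real ratio.
ratio = compression_ratio((3, 256, 512))
```

A single latent holds 16,384 values. Under the placeholder input shape the ratio works out to 48 input values per latent value, but the real figure depends on the actual image resolution.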

Vegan Filet-o-Fish (#35240) is trained with VAE compressed frames coming from the reprojective simulator, which mimics the loss of image details occurring when training with frames produced by the ML Simulator. This change validates that the compression loss is negligible and does not degrade the policy’s quality.

Example driving scenes before (left) and after (right) VAE compression

Live lateral delay estimation enabled

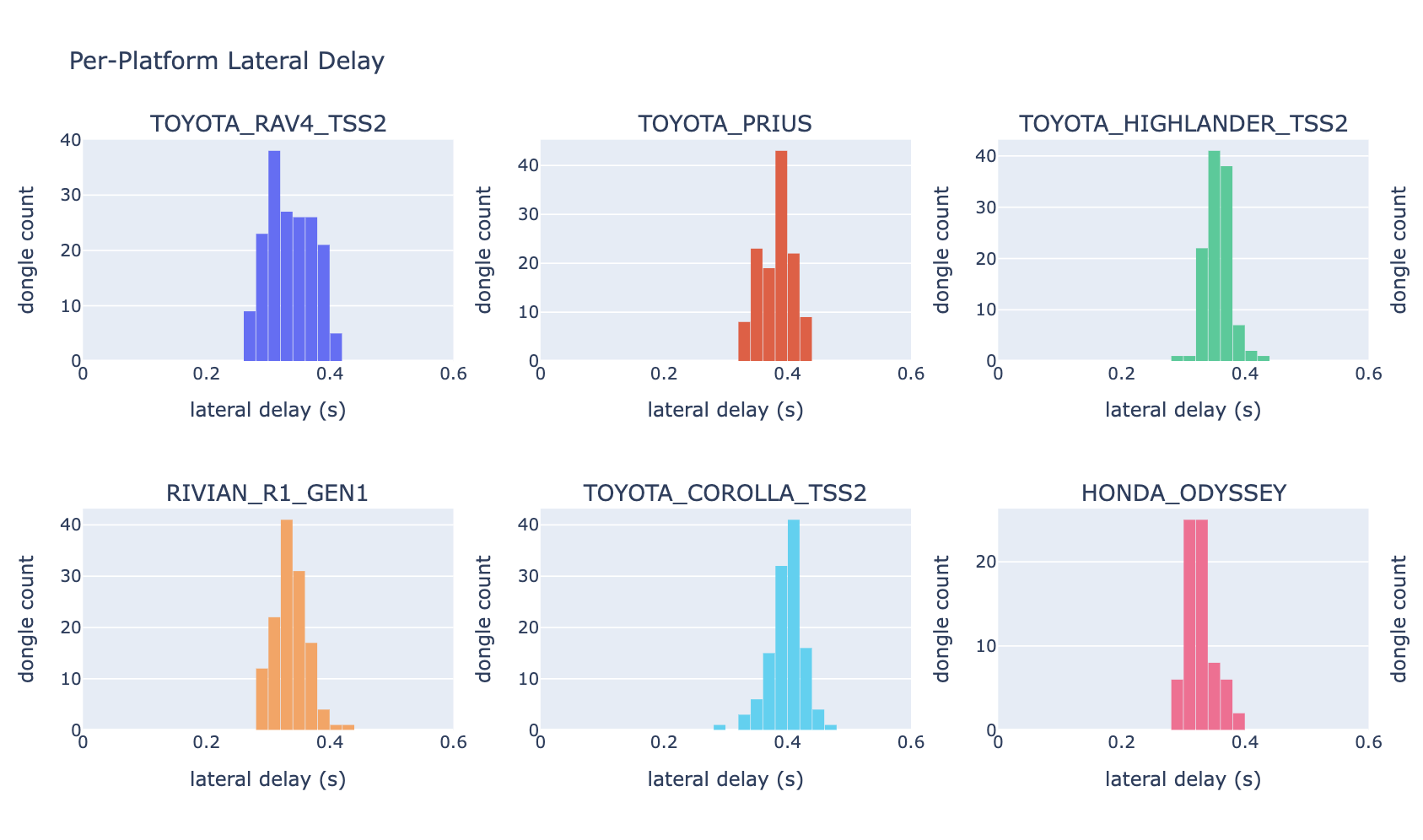

In the previous release, we added live lateral delay learning in shadow mode. After validating the data that came back from the previous release, this release enables the use of the live values, and we expect more accurate steering on some cars.

Lag values reliably converge for different cars
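One generic way to estimate an actuator delay like this is to cross-correlate the commanded signal with the measured response and pick the shift that aligns them best. The sketch below illustrates that idea on synthetic data. It is not openpilot's estimator, and the function name, signals, and sample counts are all assumptions made for the example.

```python
import random

def estimate_lag(desired, actual, max_lag):
    """Return the lag (in samples) that best aligns actual with desired.

    Tries every lag from 0 to max_lag and keeps the one that maximizes the
    average product of desired[i] and actual[i + lag].
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        n = len(desired) - lag
        score = sum(desired[i] * actual[i + lag] for i in range(n)) / n
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

# Synthetic test signal: random commands, and a response delayed by 5 samples.
rng = random.Random(0)
true_lag = 5
desired = [rng.uniform(-1.0, 1.0) for _ in range(500)]
actual = [0.0] * true_lag + desired[:-true_lag]

lag_samples = estimate_lag(desired, actual, max_lag=20)
```

Multiplying `lag_samples` by the sample period gives the delay in seconds. A live estimator would also need to handle noise, low-excitation periods, and slow drift, which this sketch ignores.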

Community driving feedback

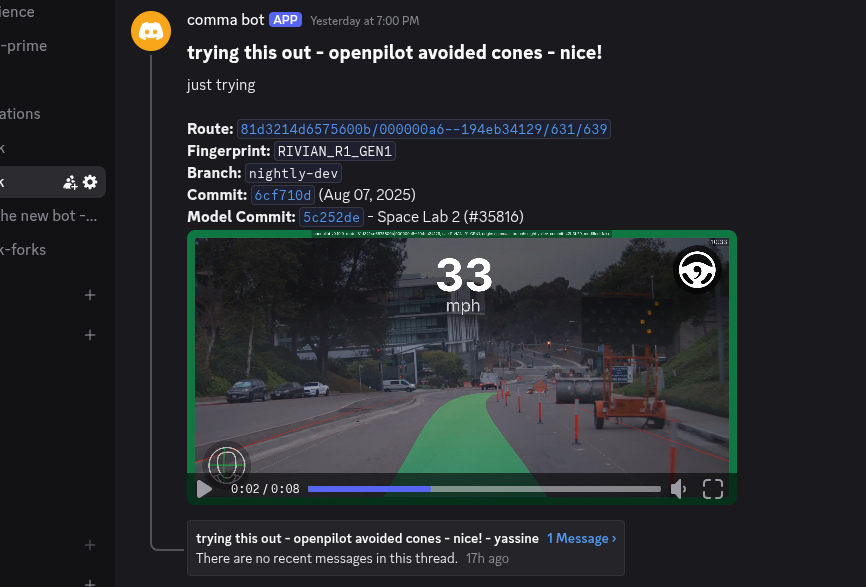

A good bug report is one of the best ways to contribute, and we’ve made it easier to share your driving feedback. Simply share the comma connect link and write up a short description, and our new Discord bot will prepare a complete report with a video clip for the team to review.

Clipping tool

The video clips in the #driving-feedback channel are generated with the new clipping tool, built by trey (#35071).

Try it out with the demo route or one of your own routes like this:

tools/clip/run.py --demo --output myawesomeclip.mp4

Dashcam audio recording (opt-in)

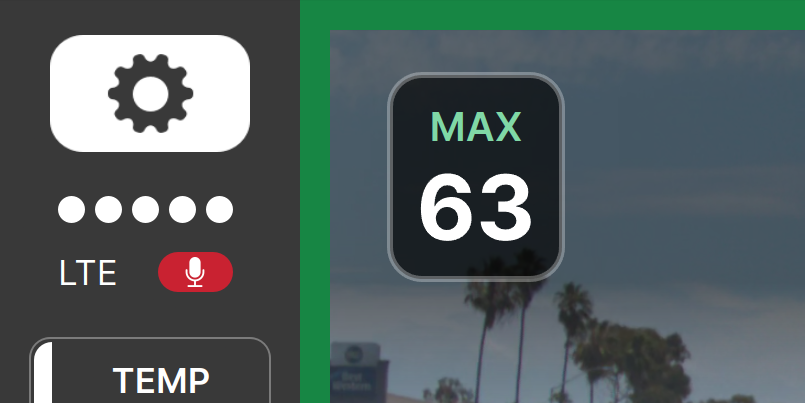

This release adds a new toggle that enables microphone recording with the dashcam video. openpilot encodes the audio together with the dashcam video, so it plays back in connect and in any videos you download.

When recording, a microphone indicator appears on the sidebar.

comma three moves to LTS

In order to focus all development efforts on shipping improvements to the comma 3X, comma three is moving to its own long term support (LTS) release track. For users on release, the updater will automatically migrate you to the new release-tici branch, where bug fixes will continue to be shipped.

Further docs can be found at the hardware repo.

Cars

commaCarSegments v2

We release an updated version of commaCarSegments, adding data from all the new car ports that came in over the last year. Check out the dataset on Hugging Face.

commaCarSegments v2 is out 🚗

It's 3000 hours of CAN data from our fleet of 20k openpilot users all over the world.

Play with more raw CAN data from production cars than @Ford or @Rivian even have access to. Which cars have the best AEB? Road noise? Let's find out!

Posted by comma (@comma_ai), July 10, 2025

Car interface slimming

This release removes a significant chunk of the work needed to port a new car or maintain an existing one.

We removed unnecessary CAN signals that were previously required to be reverse-engineered, such as gas and brake percentages and wheel speeds. We plan to further reduce this surface area to the absolute minimum to improve maintainability as the compatibility list continues to grow.

The CAN parser was also rewritten entirely in Python (opendbc#2539) with automatic CAN message frequency detection (opendbc#2527), saving 700 lines (opendbc#2546) of car port code. The new CANParser also enabled us to move the car-specific checksum code into the respective brand folders (opendbc#2541).
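To illustrate what automatic frequency detection can look like, here is a minimal sketch that estimates each message's send rate from observed timestamps, so the rate does not have to be hard-coded per car port. This is not the opendbc implementation. The function name, the `(timestamp, address)` frame format, and the example addresses are hypothetical.

```python
from collections import defaultdict

def detect_frequencies(frames):
    """Estimate the send rate in Hz of each CAN message address.

    frames: iterable of (timestamp_seconds, address) tuples.
    """
    stamps = defaultdict(list)
    for t, addr in frames:
        stamps[addr].append(t)

    freqs = {}
    for addr, ts in stamps.items():
        if len(ts) < 2:
            continue  # not enough samples to estimate a rate
        span = max(ts) - min(ts)
        if span > 0:
            # n timestamps cover n - 1 intervals.
            freqs[addr] = (len(ts) - 1) / span
    return freqs

# Toy capture: one message every 10 ms and another every 100 ms, for one second.
frames = [(i * 0.01, 0x123) for i in range(101)]
frames += [(i * 0.1, 0x456) for i in range(11)]
freqs = detect_frequencies(frames)
```

On the toy capture this yields roughly 100 Hz for the first address and 10 Hz for the second. A real parser would also need to handle jitter and messages that stop arriving.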

Bug fixes

- Allow manumatic sport gear for Honda (#35911) and Toyota (#35696)

- Hyundai CAN FD: eliminate lateral steering wheel oscillation on the highway (opendbc#2591)

- Hyundai CAN FD: tolerate cruise button message occasionally dropping out (opendbc#2673)

Enhancements

- Ford Bronco Sport: reduce lateral overshoot at high speeds (opendbc#2430)

- Move full car unit tests from openpilot to opendbc (opendbc#2578)

Car Ports

- Acura MDX 2025 support thanks to vanillagorillaa and MVL! (opendbc#2129)

- Honda Accord 2023-25 support thanks to vanillagorillaa and MVL! (opendbc#2610)

- Honda CR-V 2023-25 support thanks to vanillagorillaa and MVL! (opendbc#2475)

- Honda Pilot 2023-25 support thanks to vanillagorillaa and MVL! (opendbc#2636)

Join the community

openpilot is built by comma together with the openpilot community. Get involved in the community to push the driving experience forward.

- Join the community Discord

- Post your driving feedback in #driving-feedback on Discord

- Upload your driving data with Firehose Mode

- Get started with openpilot development

Want to build the next release with us? We’re hiring.
