openpilot 0.9.6

Driving model improvements

This release has two notable driving model improvements.

In the Blue Diamond model, we increased the number of unique images that are used to train the Vision Model, in addition to applying more regularization (weight decay) and removing the Global Average Pooling from the FastViT architecture. These changes noticeably improved driving performance, especially around desire situations such as exits and lane changes.

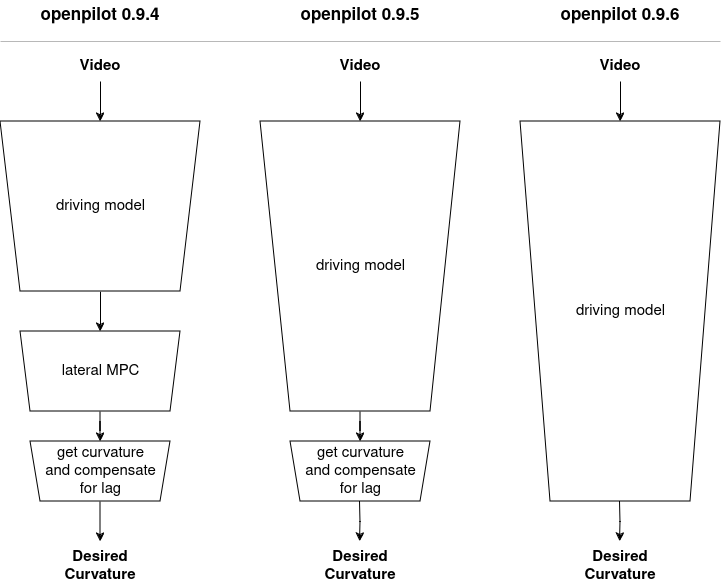

In the Los Angeles model, we changed the interface between the model and the rest of openpilot. The model now outputs a single action for the car to perform: in this case, reaching a desired curvature. This reduces the lateral API between the model and controls to a single value, which simplifies the code and allows the model to do more. It also improves performance and prepares the architecture to do RL more cleanly in the future. All of these changes apply only to lateral; we will make similar changes to longitudinal soon.

Left: the driving model predicts a plan, which feeds into an MPC optimization algorithm whose output is converted into a desired curvature using classical approximations.

Center: the MPC optimization is learned and part of the driving model.

Right: the model now directly outputs a desired curvature.
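On the controls side, one curvature value is enough to derive a lateral target once the vehicle speed is known. As a minimal sketch using plain circular-motion kinematics (the function name is hypothetical and this is not openpilot's controller code), a desired curvature κ in 1/m at speed v in m/s corresponds to a desired lateral acceleration of κ·v²:

```python
def desired_lateral_accel(desired_curvature: float, v_ego: float) -> float:
    """Lateral acceleration (m/s^2) required to follow a path of the given
    curvature (1/m) at speed v_ego (m/s), from a = curvature * v^2."""
    return desired_curvature * v_ego ** 2


# A curve with a 100 m radius (curvature 0.01 1/m) taken at 20 m/s (72 km/h)
print(desired_lateral_accel(0.01, 20.0))
```

Because the requirement grows with the square of speed, the same curvature demands far more lateral acceleration at highway speeds than in town.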

New driver monitoring model

The new Quarter Pounder Deluxe driver monitoring model is now trained with a significantly more diverse dataset featuring 4x the number of unique users (3200+) compared to the previous model. This leads to improved performance for a wider variety of car interiors and drivers, while sensitivity of distraction alerts remains the same.

bodyjim

comma body finally gets a Gymnasium environment API, which enables more effective RL research and simplifies robotics application development. bodyjim is a separate pip package designed specifically for the body. It works both locally on-device and remotely on a PC over the local network. With just a few lines of code, it’s possible to fetch images from multiple cameras at once, stream arbitrary cereal messages, and drive around.

It’s as easy as pip install bodyjim and running this snippet on your PC:

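```python
import bodyjim

# Connect to the body over the local network, requesting the driver camera
# and two cereal services in each observation
env = bodyjim.BodyEnv("<body_ip_address>", cameras=["driver"], services=["accelerometer", "gyroscope"])

# Send one action; step() returns the Gymnasium 5-tuple
# (observation, reward, terminated, truncated, info)
obs, _, _, _, _ = env.step((1.0, 0.0))

# Camera frames are keyed by camera name, services by service name
dcam_image = obs["cameras"]["driver"]
accelerometer, gyro = obs["accelerometer"], obs["gyroscope"]
```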

WebRTC streaming

Under the hood, bodyjim is powered by webrtcd, a new openpilot daemon, and our new teleoprtc package. bodyjim is just the first application for WebRTC streaming in openpilot. In the future, we could also stream the cameras and microphone for a sentry-like experience.

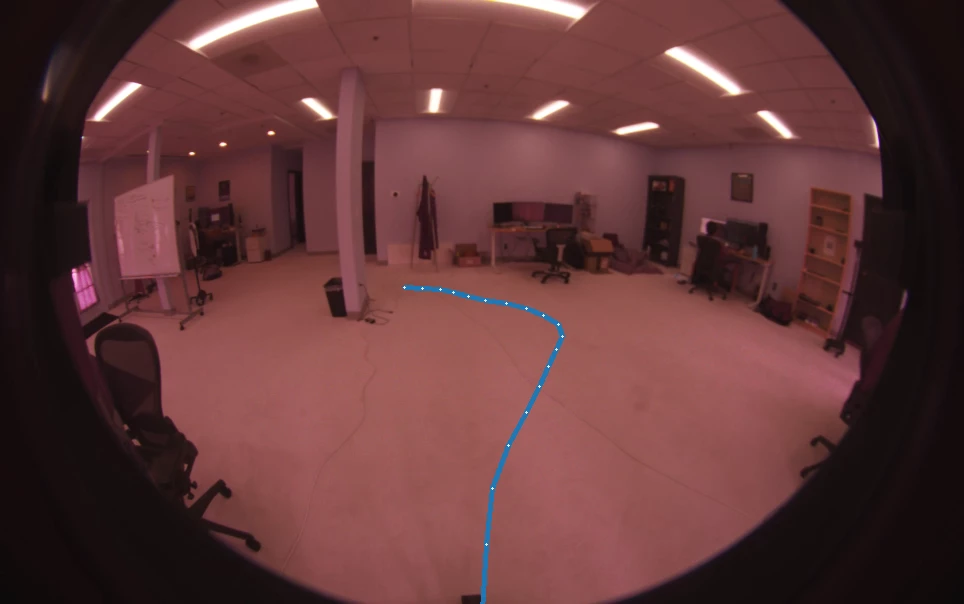

Being followed by a comma body, one of the example scripts in bodyjim

AGNOS 9

This is a minor AGNOS update that enables support for LightningHard, our own Snapdragon 845-based SOM, which will soon begin shipping in comma 3Xs. This update also includes:

- fixed bootsplash blink

- fixed rare audio playback issues

- reduced banding when recording the device’s display

- USB3 support for fastboot flashing

- device’s serial number in the hostname, e.g. tici -> comma-aeffe5d0

ML Controls

In the 0.9.2 release, we introduced a non-linear feed-forward function for the Chevrolet Bolt. Although it improved controls, it was not a scalable solution. It did not account for the dependence on speed and forward acceleration, among other factors. Even if we had accounted for every variable, the process involved first guessing a parameterized function and then fitting its constants, and finally testing it in an actual car ourselves or asking users to try it out. Even then, there was still a chance of not covering the entire spectrum of scenarios. This long and fuzzy loop kept us from merging PRs with custom feed-forward functions, weight changes that users said “felt better”, or even neural models like twilsonco’s NNFF, since there was no real way to test them thoroughly.

ML Controls Sim

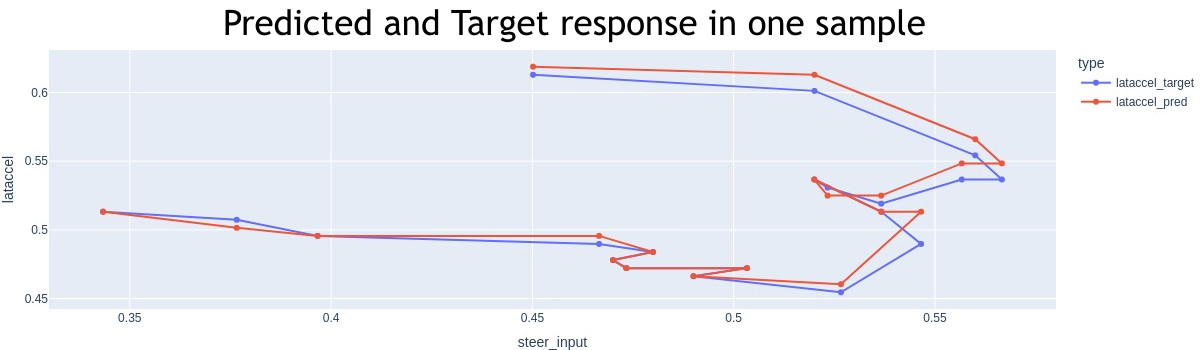

Prediction vs ground-truth in one sample (not autoregressive)

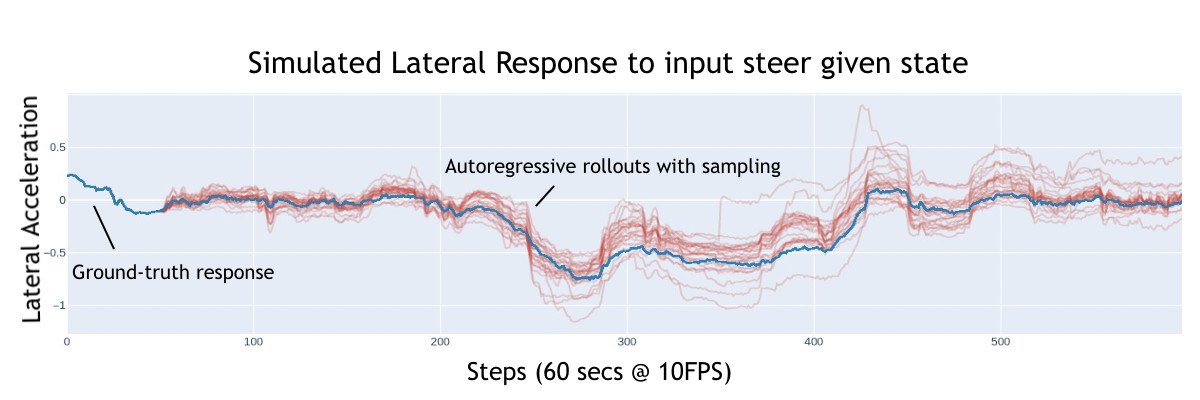

So we developed MLControlsSim, a GPT-2 based model that takes the car state (speed, road roll, acceleration, etc.) and steer input over a fixed context length and predicts the car’s lateral response. It was trained on the comma-steering-control dataset. During inference, the model is run autoregressively to predict lateral acceleration. Once well trained, this model can be used in place of a car!

Multiple rollouts on one segment
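Autoregressive inference is what lets the model stand in for a car: after a short warm-up, each prediction is appended to the history and fed back as input for the next step, instead of the recorded ground truth. A minimal sketch, assuming a hypothetical `toy_model` in place of the trained GPT-2 model (a fixed first-order response, not learned), a state reduced to speed and road roll, and an arbitrary context length:

```python
from collections import deque

CONTEXT = 20  # hypothetical context length, in steps


def toy_model(past_lat_accel, state, steer):
    """Hypothetical stand-in for the trained simulator model.

    Takes the lateral-acceleration history plus the current car state and
    steer input, and returns the next lateral acceleration. This toy version
    is a first-order lag toward a speed-dependent steady state and only uses
    the most recent history entry; a learned model would use the whole window.
    """
    v_ego, roll = state  # speed in m/s, road roll in radians
    steady_state = 0.15 * v_ego * steer - 9.81 * roll
    prev = past_lat_accel[-1]
    return prev + 0.2 * (steady_state - prev)


def rollout(states, steers, lat_accel_gt, context=CONTEXT):
    """Autoregressive rollout: seed the window with `context` ground-truth
    samples, then feed each prediction back in as history."""
    window = deque(lat_accel_gt[:context], maxlen=context)
    preds = list(lat_accel_gt[:context])
    for t in range(context, len(steers)):
        pred = toy_model(list(window), states[t], steers[t])
        preds.append(pred)
        window.append(pred)  # the model's own output, not the ground truth
    return preds


# Constant steer on a flat road at 20 m/s; ground truth only seeds the window
n = 100
preds = rollout([(20.0, 0.0)] * n, [0.5] * n, [0.0] * n)
print(f"final predicted lateral accel: {preds[-1]:.3f} m/s^2")
```

Errors compound in this setup, since a bad prediction becomes part of the input for every later step, which is why a rollout over a whole segment is a stricter test of the simulator than one-step prediction.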

Bolt Neural Controls

One simple way to verify that the controls simulator works as intended is to run the ground-truth, linear, and non-linear feed-forward functions in loop in the simulator and compare them.

The controls simulator run in loop with different controllers
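Running controllers "in loop" means the controller's output drives the simulator and the simulator's output feeds back into the controller, rather than scoring each controller against logged data. The sketch below illustrates such a harness under stated assumptions: the toy car (a lag toward a saturating `tanh` response), both feed-forward functions, the feedback gain, and the target profile are all hypothetical, and the ground-truth replay is omitted because the sketch has no recorded data.

```python
import math

A_MAX = 4.0   # hypothetical saturation of the toy car's response (m/s^2)
ALPHA = 0.3   # hypothetical per-step response rate of the toy car
KP = 0.1      # hypothetical proportional feedback gain


def toy_car_step(lat_accel, steer):
    """Hypothetical simulator step: lateral accel lags toward A_MAX * tanh(steer)."""
    return lat_accel + ALPHA * (A_MAX * math.tanh(steer) - lat_accel)


def linear_ff(target):
    # Linear feed-forward: the slope of the steady-state curve at zero
    return target / A_MAX


def nonlinear_ff(target):
    # Non-linear feed-forward: invert the steady-state curve (clipped for atanh)
    return math.atanh(max(-0.99, min(0.99, target / A_MAX)))


def closed_loop_rms(feedforward, targets, kp=KP):
    """Run a controller in loop with the simulator; return the RMS error
    between target and simulated lateral acceleration."""
    lat_accel, sq_err = 0.0, 0.0
    for target in targets:
        # Controller output: feed-forward plus small proportional feedback
        steer = feedforward(target) + kp * (target - lat_accel)
        lat_accel = toy_car_step(lat_accel, steer)
        sq_err += (target - lat_accel) ** 2
    return math.sqrt(sq_err / len(targets))


# Ramp the target lateral acceleration up to 3 m/s^2, then hold it
targets = [min(3.0, 0.05 * t) for t in range(200)]
for name, ff in [("linear", linear_ff), ("non-linear", nonlinear_ff)]:
    print(f"{name:>10}: RMS error {closed_loop_rms(ff, targets):.3f} m/s^2")
```

In this toy, the car's response saturates, so the linear fit leaves a steady-state error during the held turn that the small feedback term cannot remove, while the inverted non-linear curve settles on the target.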

Now that we had our testing infrastructure in place, we trained a feed-forward function, a very simple 4-layer MLP, to predict the required steer input.

feed-forward target and predictions
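For a sense of scale, a 4-layer MLP feed-forward amounts to only a few small matrix multiplications. The sketch below shows the forward pass only, in plain Python. The input features, hidden width, and `tanh` activation are assumptions (the post does not specify them), the weights are random rather than trained, and fitting them to logged steer inputs (for example by minimizing squared error) is omitted.

```python
import math
import random

# Hypothetical inputs: the post does not list the model's features
FEATURES = ("lat_accel_target", "v_ego", "a_ego", "roll")
HIDDEN = 16  # hypothetical hidden width


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases for one fully connected layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(layer, x):
    """Affine transform W @ x + b for one layer."""
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


class FeedForwardMLP:
    """4 fully connected layers: features -> HIDDEN -> HIDDEN -> HIDDEN -> steer."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        sizes = [len(FEATURES), HIDDEN, HIDDEN, HIDDEN, 1]
        self.layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

    def __call__(self, features):
        x = [features[name] for name in FEATURES]
        for layer in self.layers[:-1]:
            x = [math.tanh(v) for v in dense(layer, x)]  # hidden activations
        return dense(self.layers[-1], x)[0]  # linear output: steer command


# Untrained weights: the output is meaningless until the model is fit to data
model = FeedForwardMLP()
steer = model({"lat_accel_target": 1.0, "v_ego": 20.0, "a_ego": 0.0, "roll": 0.0})
```

A model this small is cheap enough to evaluate at every control step, and unlike a hand-picked parameterized function, its shape is learned from data.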

We tested the MLP in loop in our controls simulator, which showed that it is the best-performing feed-forward!

Controls simulator run in loop with the neural feed-forward

Once we shipped this feed-forward function to the controller in master, we observed that the driving model’s y0 prediction (where the model wants to be at t=0) was better with the new controller in master than with the controller in release.

Distribution of y0 in turns

This is a very exciting first step towards end-to-end neural controls for all platforms. First, it removes the guesswork from learning feed-forward functions. More importantly, it gives us a framework to reason about and compare various algorithms without having to touch a car. There are still outstanding issues, however. For example, the simulator does not model high-frequency oscillations correctly, as they are mostly absent from the training data (users usually do not keep openpilot engaged during oscillations). Our current priority is to resolve these issues and release the platform-specific simulator models for experimentation by the community.

openpilot Tools

Segment Range

In this release, we introduce a new format for specifying routes and segments, called the “segment range”. Let’s break it down:

```
344c5c15b34f2d8a / 2024-01-03--09-37-12 / 2:6       / q
[dongle id]        [timestamp]            [selector]  [query type]
```

The selector allows you to select segments using Python slicing syntax. In this example, you will get segments [2,3,4,5].
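The selector semantics can be illustrated with a small parser. This is a hypothetical sketch, not openpilot's implementation: `parse_segment_range`, `select_segments`, the regular expression, and the default query type `"r"` for rlogs are all assumptions, and the number of segments in the route must be supplied because the string alone does not encode it.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical pattern: "<dongle id>/<timestamp>[/<selector>][/<query type>]"
SEGMENT_RANGE_RE = re.compile(
    r"^(?P<dongle_id>[0-9a-f]{16})"
    r"/(?P<timestamp>\d{4}-\d{2}-\d{2}--\d{2}-\d{2}-\d{2})"
    r"(?:/(?P<selector>[-0-9:]+))?"
    r"(?:/(?P<query>[a-z]))?$"
)


@dataclass
class SegmentRange:
    dongle_id: str
    timestamp: str
    selector: Optional[str]
    query: str


def parse_segment_range(text: str) -> SegmentRange:
    m = SEGMENT_RANGE_RE.match(text)
    if m is None:
        raise ValueError(f"not a segment range: {text!r}")
    # Default to rlogs ("r", an assumed code) when no query type is given
    return SegmentRange(m["dongle_id"], m["timestamp"], m["selector"], m["query"] or "r")


def select_segments(selector: Optional[str], num_segments: int) -> list:
    """Apply a Python-style index or slice selector to a route's segment numbers."""
    segments = list(range(num_segments))
    if selector is None:
        return segments  # no selector: the whole route
    if ":" not in selector:
        return [segments[int(selector)]]  # a single index, e.g. -1 for the last
    return segments[slice(*(int(p) if p else None for p in selector.split(":")))]


sr = parse_segment_range("344c5c15b34f2d8a/2024-01-03--09-37-12/2:6/q")
print(sr.query, select_segments(sr.selector, num_segments=10))  # q [2, 3, 4, 5]
```

Reusing Python's own `slice` keeps the semantics identical to list slicing, including negative indices and steps.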

Redesigned LogReader

This new format pairs nicely with our new LogReader, which is much simpler to use and can automatically determine the source to read from.

```python
from openpilot.tools.lib.logreader import LogReader

# get rlogs from segments 2->5
lr = LogReader("344c5c15b34f2d8a/2024-01-03--09-37-12/2:6")

# get the last qlog
lr = LogReader("344c5c15b34f2d8a/2024-01-03--09-37-12/-1/q")

# get every second qlog (Python slicing syntax!)
lr = LogReader("344c5c15b34f2d8a/2024-01-03--09-37-12/::2/q")
```

commaCarSegments

We also released commaCarSegments, a new open source dataset of car segments with CAN and carParams data. The dataset targets 1000 segments per platform plus 20 per dongle ID, and the initial release contains 145595 segments. That’s about 2500 hours of data from 3677 users and 223 car models. We plan to frequently refresh this dataset with new platforms as they become supported and to expand it to unsupported cars that we have logs for.

We also released a few notebooks as examples of what we do internally to validate car changes. Validating changes takes the majority of our time when reviewing PRs, and this enables external contributors to do the same level of validation that we do. With the release of this dataset, there’s no longer a difference between an “internal” and “external” workflow for all car-specific work; we’re all working with the same code, data, and tools!

Cars

This release brings support for the Toyota RAV4 and RAV4 Hybrid 2023-24, which use Lane Tracing Assist, Toyota’s new angle-based steering control API. As we did when adding support for Ford’s curvature control, we took the opportunity to refactor and simplify panda safety along the way:

- Refactor to specify RX message frequency in Hz for readability (panda#1754)

- Refactor to de-duplicate RX and TX message checks (panda#1727, panda#1730)

- Add a safety code coverage report and assert full line coverage over the entire safety folder (panda#1699)

- Add ELM327 safety tests (panda#1715)

- Add comma body safety tests (panda#1716)

- Support multiple relay malfunction addresses, in preparation for RAV4 alpha openpilot longitudinal (panda#1707)

We are also introducing mutation testing into panda, starting with a simple MISRA C:2012 falsification test (panda#1763). This will soon expand to the car safety tests (panda#1755) to catch coverage gaps in the tests that could lead to safety bugs.
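Mutation testing checks the tests rather than the code: small deliberate changes (mutants) are injected into the code under test, and a thorough test suite should fail on every one of them. A surviving mutant points to a gap in coverage. The sketch below illustrates the idea in Python on a hypothetical torque-limit check; panda's mutation testing targets its C code with its own tooling, and nothing here mirrors that implementation.

```python
import operator

MAX_TORQUE = 1500  # hypothetical torque limit


def make_torque_check(cmp=operator.le):
    """Build the check under test; `cmp` lets a mutant swap the comparison."""
    def torque_allowed(torque):
        return cmp(abs(torque), MAX_TORQUE)
    return torque_allowed


def weak_suite(check):
    # Only probes values far from the limit
    return check(0) and not check(5000)


def strong_suite(check):
    # Also probes the exact boundary and one step past it
    return check(0) and check(MAX_TORQUE) and not check(MAX_TORQUE + 1)


# Each mutant replaces the original `<=` comparison
MUTANTS = {"<= -> <": operator.lt, "<= -> >=": operator.ge, "<= -> ==": operator.eq}


def surviving_mutants(suite):
    """Return the mutants the suite fails to kill (the suite still passes)."""
    assert suite(make_torque_check()), "suite must pass on the original code"
    return [name for name, cmp in MUTANTS.items() if suite(make_torque_check(cmp))]


print("weak suite misses:", surviving_mutants(weak_suite))      # ['<= -> <']
print("strong suite misses:", surviving_mutants(strong_suite))  # []
```

Both suites pass on the original code, so ordinary test results cannot tell them apart; only the surviving off-by-one mutant reveals that the weak suite never checks the boundary.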

CAN fuzzing test

openpilot and panda have duplicate code for parsing the car’s state and safety logic. This duplication allows the panda to enforce the safety model independently of openpilot, but it also creates surface area for bugs where openpilot and panda disagree on the car’s state. In this release, we add a test that fuzzes the CAN bytes and compares the car states parsed by openpilot and panda to ensure they are consistent (#30443).

This new test caught several bugs:

- CANParser: don’t overflow invalid counters (opendbc#976)

- CANParser: don’t partially update message signals (opendbc#977)

- Honda Bosch: fix alternate brake address check race condition (panda#1746)

- Hyundai: fix brake pressed bitmask (panda#1724)

- Nissan: check bus for brake pressed message (panda#1740)

- safety: add comma pedal counter and frequency checks (panda#1735)
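The idea behind the fuzzing test can be sketched with two independent parsers of the same signal. Everything here is hypothetical: the message layout, the brake-pressed bit, and both parser functions are made up for illustration, and the real test compares openpilot's and panda's actual car-state parsing rather than toy functions. One parser carries a deliberate bitmask bug, similar in kind to the brake-pressed fixes listed above, and random payloads expose the disagreement.

```python
import random

# Hypothetical layout: brake pressed is bit 5 of byte 2 in an 8-byte payload
BRAKE_BYTE, BRAKE_BIT = 2, 5


def brake_pressed_openpilot(data: bytes) -> bool:
    """Reference parser (stands in for the openpilot side)."""
    return bool((data[BRAKE_BYTE] >> BRAKE_BIT) & 1)


def brake_pressed_panda(data: bytes) -> bool:
    """Second parser (stands in for panda) with a deliberate bug: its mask
    also covers bit 4, so it reports a press when only bit 4 is set."""
    return (data[BRAKE_BYTE] & 0x30) != 0


def fuzz(n_cases=10_000, seed=0):
    """Return the first random payload on which the two parsers disagree."""
    rng = random.Random(seed)
    for _ in range(n_cases):
        data = bytes(rng.getrandbits(8) for _ in range(8))
        if brake_pressed_openpilot(data) != brake_pressed_panda(data):
            return data
    return None


mismatch = fuzz()
print("mismatch found:", mismatch.hex() if mismatch else None)
```

Neither parser needs a hand-written expected value: any disagreement is a bug in one of them, which is what makes comparing two implementations effective at finding cases nobody thought to test.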

Enhancements

- GM: check blind spot monitors on newer models (#30861)

- GM: improve Bolt EV & EUV lateral control with neural lateral feed-forward (#31266)

- Hyundai CAN FD: enable alpha openpilot longitudinal for some ICE vehicles (#30034)

- Hyundai CAN FD: improve steering tune on Tucson 2022-23 (#30513)

- Subaru: enable alpha openpilot longitudinal for older models (#30714)

- Testing: parallelize panda safety tests (panda#1756)

- Testing: randomize subset of internal car segment list for TestCarModel, more coverage (#30653)

- Toyota: enable alpha openpilot longitudinal for RAV4 & RAV4 Hybrid 2022-24 (#29094)

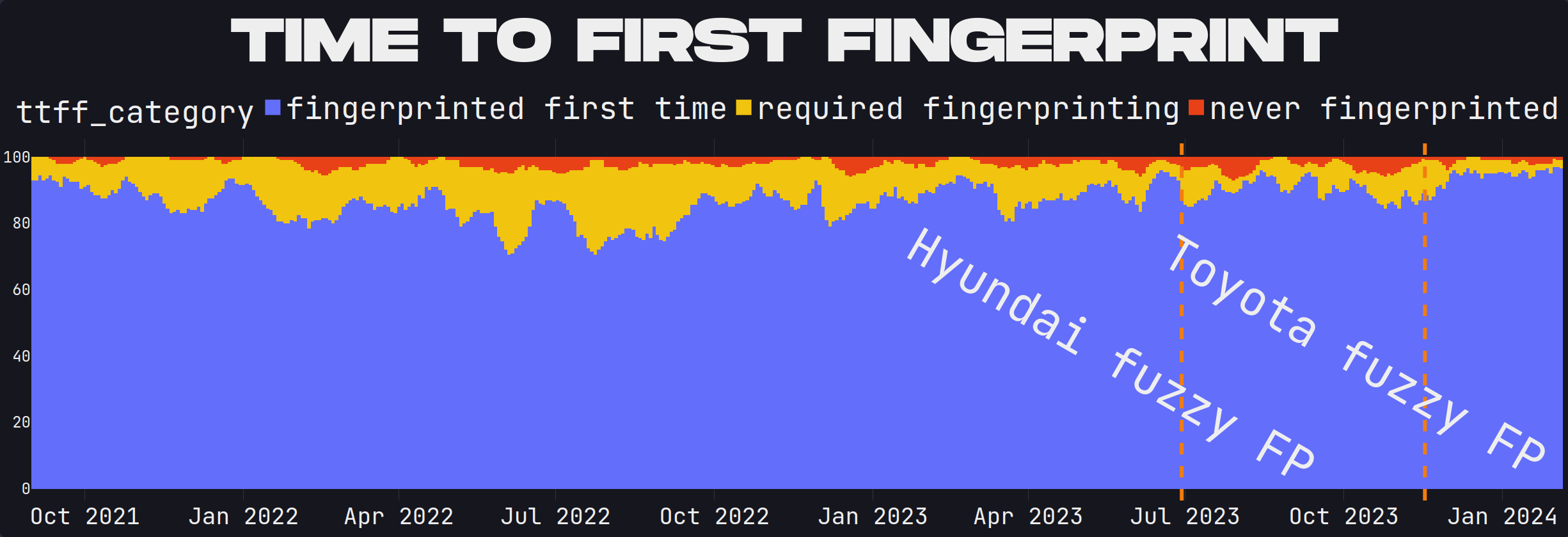

Fingerprinting

This release also heavily focuses on improving the plug-and-play experience of the comma 3X by removing the reliance on the OBD-II port for fingerprinting for many makes and models. We also further improved fuzzy fingerprinting for all Toyota models and select Hyundai CAN FD models, with more to come in the next release.

A daily rolling metric of the last 100 newly fingerprinting devices showing improvement in recent months

- Ford: fingerprint without the OBD-II port (#31195)

- GM: log camera firmware versions on newer-generation models (#31221)

- Hyundai CAN FD: merge some platforms (#31238, #31235) by detecting if the vehicle is hybrid (#31237), enabling improved fuzzy fingerprinting for newer Sorento models (#31242)

- Mazda: fingerprint without the OBD-II port (#31261)

- Nissan: fingerprint without the OBD-II port (#31243)

- Subaru: fingerprint without the OBD-II port (#31174)

- Toyota: remove an irrelevant ECU from fuzzy fingerprinting, improving identification of unseen cars (#31043)

- VIN: query without OBD-II port (#31165, #31224, #31308, #31348, #31398)
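Fuzzy fingerprinting identifies a car whose exact firmware versions have not been seen before by comparing coarser features of those versions. A minimal sketch, assuming a hypothetical database that maps each platform to the platform codes seen per ECU (the platform names, ECU names, codes, and the `platform_code` extraction rule are all invented for illustration; openpilot's real per-brand logic differs): a platform is a candidate only if every queried ECU's code was seen for it, and a result is returned only when exactly one platform matches.

```python
# Hypothetical database: platform -> ECU -> platform codes seen in the wild
KNOWN_CODES = {
    "PLATFORM_A": {"eps": {"AB"}, "fwdRadar": {"X1"}},
    "PLATFORM_B": {"eps": {"CD"}, "fwdRadar": {"X1", "X2"}},
}


def platform_code(fw_version: str) -> str:
    """Hypothetical rule: the platform code is the first two characters of
    the firmware version; the rest is a revision that varies between cars."""
    return fw_version[:2]


def fuzzy_match(car_fw: dict):
    """Return the single platform consistent with every ECU, else None."""
    candidates = []
    for platform, ecus in KNOWN_CODES.items():
        if all(ecu in ecus and platform_code(fw) in ecus[ecu]
               for ecu, fw in car_fw.items()):
            candidates.append(platform)
    return candidates[0] if len(candidates) == 1 else None


# Unseen firmware revisions, but recognizable platform codes
print(fuzzy_match({"eps": "CD-0042", "fwdRadar": "X2-0007"}))  # PLATFORM_B
```

Because every queried ECU must agree in this sketch, an ECU whose codes vary independently of the platform can only block a match; that is one way to see why dropping an irrelevant ECU can help identify unseen cars.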

Car Ports

- Chevrolet Equinox 2019-22 support thanks to JasonJShuler and nworb-cire! (#31257)

- Dodge Durango 2020-21 support (#31015)

- Hyundai Staria 2023 support thanks to sunnyhaibin! (#30672)

- Kia Niro Plug-in Hybrid 2022 support thanks to sunnyhaibin! (#30576)

- Lexus LC 2024 support thanks to nelsonjchen! (#31199)

- Toyota RAV4 2023-24 support (#30109)

- Toyota RAV4 Hybrid 2023-24 support (#30109)

Join the team

Want a job? We love contributors and good solutions to our calibration challenge. We’re also offering a cash hiring bounty if you refer someone.