Crowdsourced Segnet (you can help!)

Although openpilot models are mostly trained end to end using the path the car drove, there are a few steps in our pipeline that still require knowing what things in the image are.

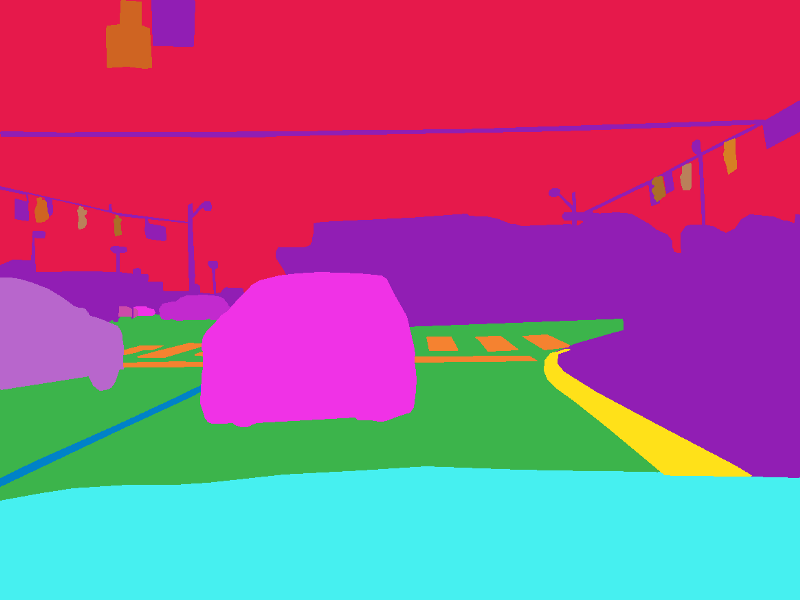

Unlike datasets like Mapillary Vistas and Cityscapes, we have many less classes, and we include much more diverse images (like night driving). Our segnet has 5 labels, in 3 broad classes.

- Moves with scene — Road, Lane Markings, Undrivable (including sky)

- Moves themselves — Movable (like vehicles, pedestrians, etc…)

- Moves with you — My car (and anything inside it) It’s important to filter out the latter two classes while groundtruthing posenets. And lane markings and roads are important for determining tracks of cars, for current models and potentially future HD maps.

Our current segnet is terrible

Okay, so it’s not usually quite that bad, but in pictures that look less than pristine, the segnet can do a really bad job. That is image 793 in the comma10k dataset.

Right now, you can improve it. Fork the comma10k repo and edit the mask png in whatever image editor you choose. Be careful to only use the 5 colors from the README. When done, submit a pull request.

Commercial Tools

We tried ScaleAPI and Labelbox. The Labelbox labeling tool is slow and hard to use, and we don’t see how it provides much value over the old commacoloring tool, never mind using Photoshop. And the one image we submitted to ScaleAPI came back incorrect, never mind the $6.40 price we paid for it. Tried their auditing, but no response yet.

The large vehicle in the center of the scene is marked “undrivable unmovable,” not a small oversight. The original image is here, 999 from the comma10k dataset. Note that we chose to use more than the 5 labels since ScaleAPI charges the same regardless.

We are unimpressed with the current commercial offerings.

A Continually Improving Dataset

Many of the existing self driving car semantic segmentation datasets have bugs, but because they aren’t on something like GitHub, there’s no way to fix them. We want to make a perfect dataset of 10k images, the comma10k, and forking and pull requests are a great way to do this. GitHub even has a very nice image diff tool.

For now, we have released 1k images in the repo. Let’s get these labelled, then we’ll train a segnet on the 1k to label the next 1k. Touch up those 1k, and after doing this a few times, the touch ups should get easier and easier. 10k pixel accurate images coming soon! We’ve seen huge success with crowdsourced open source projects like this in the past.

This dataset is MIT licensed, meaning it can be used for a huge variety of purposes. Let’s build the next dataset used for semantic segmentation benchmarks everywhere!

Help Out Today

Join our discord and come in the #comma-pencil channel. Procedures are being worked out to make this labeling as fast and as accurate as possible. Clone the repo from here.

We are hoping to have the first 1000 images labelled by 3/16 so we can move on to phase 2. If they are, we’ll have some prizes for the top participants. No purchase necessary to play, just pull requests!

You should already be following us on Twitter.

Leave a comment