RL for car controls

Can ML learn car controls?

A generic end-to-end ML solution to robotics requires the ML to learn everything between the sensors and the actuators. There are many impressive examples of ML algorithms learning things such as robot dogs doing backflips and humanoids doing a spin kick, but they typically rely on some classical low-level control like PID or MPC. These classical control algorithms work well when the system dynamics are known and fixed, but that isn’t always a given. To get the best possible robotics solution, we want a generic method that automatically learns the optimal control behavior.

In openpilot, where we control car steering actuators, these classical low-level controllers are the source of driving issues, and should be replaced with a more performant ML solution. Humans can drive a new car and learn its “feel” within seconds. An RL algorithm should be able to do the same, and that’s a necessary step towards the development of general-purpose robotics, which is what we are working towards at comma.

The controls challenge

Driving a car requires both prediction and control. In openpilot, a policy first predicts a desired trajectory curvature (which, at a given speed, corresponds to a lateral acceleration) for the car to follow. To actually achieve this desired curvature, a controller must apply steering wheel torque. Knowing how much torque to apply is a hard controls problem that depends on speed, road roll, tire stiffness, and various other factors. A PID controller can do a decent job, but it’s imperfect and introduces noticeable driving issues in openpilot. For example, assuming a linear relationship between torque and lateral acceleration produces poor control for cars where this assumption doesn’t hold. We show this is the case for the Chevrolet Bolt EUV in our 0.9.2 release blog post.
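To make the classical baseline concrete, here is a minimal sketch assuming a plain PID acting on lateral-acceleration error. The `PID` class, its gains and the 0.1 s time step are illustrative placeholders, not openpilot’s actual controller. The helper uses the standard kinematic relation a_lat = v² · κ between path curvature κ and lateral acceleration at speed v.

```python
from dataclasses import dataclass, field
from typing import Optional


def lataccel_from_curvature(curvature: float, speed: float) -> float:
    """Lateral acceleration (m/s^2) for a path of curvature (1/m) driven at speed (m/s)."""
    return curvature * speed ** 2


@dataclass
class PID:
    """Textbook PID mapping lateral-acceleration error to a steering command.

    Gains and time step are illustrative placeholders (assumptions), not tuned values.
    """
    kp: float
    ki: float
    kd: float
    dt: float = 0.1  # control period in seconds (assumed)
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, target_lataccel: float, current_lataccel: float) -> float:
        error = target_lataccel - current_lataccel
        self._integral += error * self.dt
        # Skip the derivative term on the first call (there is no previous error yet).
        prev = error if self._prev_error is None else self._prev_error
        derivative = (error - prev) / self.dt
        self._prev_error = error
        # The command is a linear function of the error, so the controller implicitly
        # assumes a roughly linear torque-to-lateral-acceleration response.
        return self.kp * error + self.ki * self._integral + self.kd * derivative
```

Because the output is linear in the error, a single set of gains cannot match a car whose torque response is strongly nonlinear, which is the failure mode described above.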

The goal of the controls challenge is to find better controls solutions. The challenge uses a GPT-based simulator to simulate a car’s steering response. It is set up as a classical reinforcement learning task: given the desired lateral acceleration and the car state, find the optimal steer action. A generic RL solution should be able to outperform any hand-coded solution, but so far no generic ML solution has achieved a competitive score.
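The RL framing can be sketched as a hypothetical episode loop: at each step the policy sees the target and current lateral acceleration plus some car state, picks a steer action, and a simulator returns the next lateral acceleration. The `Observation` fields, the `simulate` callable and the cost weights are assumptions for illustration; the real controls challenge defines its own interface and scoring.

```python
from typing import Callable, NamedTuple, Sequence


class Observation(NamedTuple):
    target_lataccel: float   # desired lateral acceleration (m/s^2)
    current_lataccel: float  # lateral acceleration the car is producing now
    v_ego: float             # vehicle speed (m/s), one example of "car state"


Policy = Callable[[Observation], float]      # observation -> steer action
Simulator = Callable[[float, float], float]  # (current_lataccel, action) -> next lataccel


def rollout_cost(policy: Policy, simulate: Simulator, targets: Sequence[float],
                 v_ego: float = 20.0, dt: float = 0.1, jerk_weight: float = 1.0) -> float:
    """Run one episode; return mean tracking error plus a weighted jerk penalty (lower is better).

    The cost terms and weights are assumptions; an RL agent's reward would be the negative cost.
    """
    if not targets:
        raise ValueError("targets must be non-empty")
    lataccel = 0.0
    achieved = []
    for target in targets:
        action = policy(Observation(target, lataccel, v_ego))
        lataccel = simulate(lataccel, action)
        achieved.append(lataccel)
    tracking = sum((t - a) ** 2 for t, a in zip(targets, achieved)) / len(targets)
    jerks = [(achieved[i] - achieved[i - 1]) / dt for i in range(1, len(achieved))]
    jerk = sum(j ** 2 for j in jerks) / max(len(jerks), 1)
    return tracking + jerk_weight * jerk
```

With an idealized simulator that applies the action directly, a policy that outputs the target scores zero cost, while a policy that does nothing pays the full tracking error.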

We spent some time on experiments using RL to solve the controls challenge. Just like an RL agent, we need fast iteration and reliable feedback signals in research. To run experiments, we build a minimal environment and then make it incrementally harder until it models the controls challenge.

A simple toy problem

A good first step is to have RL move a simple cart. CartLatAccel is a simple Gym environment that uses basic cart kinematics, with added noise and a trajectory to follow. Think CartPole, but without the balancing: you apply an acceleration to the cart, left or right, and the objective is to follow a 1D trajectory. Without noise this is a trivial problem, but once you add lag and noise it can quickly become hard and interesting.

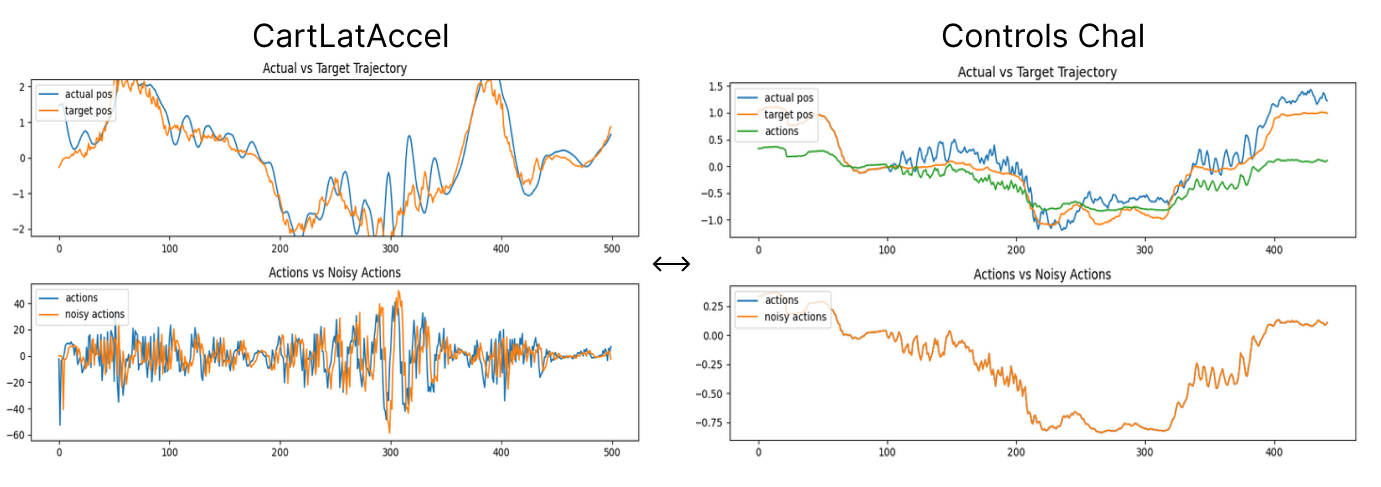

Rollout of an agent in CartLatAccel toy environment
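The toy setup described above can be sketched as a small environment. `ToyCart` below is hypothetical and is not the actual CartLatAccel code: the action is a commanded acceleration that reaches the cart through an assumed first-order lag, Gaussian noise is added to the applied acceleration, and the reward is the negative distance to the target position.

```python
import random


class ToyCart:
    """Hypothetical 1D cart in the spirit of CartLatAccel (not its actual implementation)."""

    def __init__(self, dt=0.02, lag=0.2, noise_std=0.0, max_accel=10.0, seed=0):
        self.dt, self.lag, self.noise_std, self.max_accel = dt, lag, noise_std, max_accel
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        self.pos, self.vel, self.accel = 0.0, 0.0, 0.0
        return (self.pos, self.vel)

    def step(self, action, target_pos):
        cmd = max(-self.max_accel, min(self.max_accel, action))  # action clipping
        # First-order lag: the applied acceleration moves toward the command each step.
        alpha = self.dt / (self.lag + self.dt)
        self.accel += alpha * (cmd - self.accel)
        noisy_accel = self.accel + self.rng.gauss(0.0, self.noise_std)
        # Semi-implicit Euler integration of the cart kinematics.
        self.vel += noisy_accel * self.dt
        self.pos += self.vel * self.dt
        reward = -abs(target_pos - self.pos)  # trajectory-following objective
        return (self.pos, self.vel), reward
```

Without noise, a hand-tuned PD law such as `action = 1.0 * (target - pos) - 2.0 * vel` settles onto a constant target; raising `lag` and `noise_std` is what makes the problem interesting for a learned policy.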

An on-policy RL algorithm (such as PPO) learns a policy by associating specific state-action pairs with outcomes. In contrast, evolutionary methods don’t learn from individual actions; they explore in the parameter space of the policy instead of the action space.

We try CMA-ES as an evolutionary search algorithm and find that it can solve this task. It is robust to noise, but far less sample-efficient than PPO. Our PPO algorithm learns to solve the task in under 1 s (50K rollouts, ~1M steps, batch size 1K). Not bad for RL learning a (very) simple task!

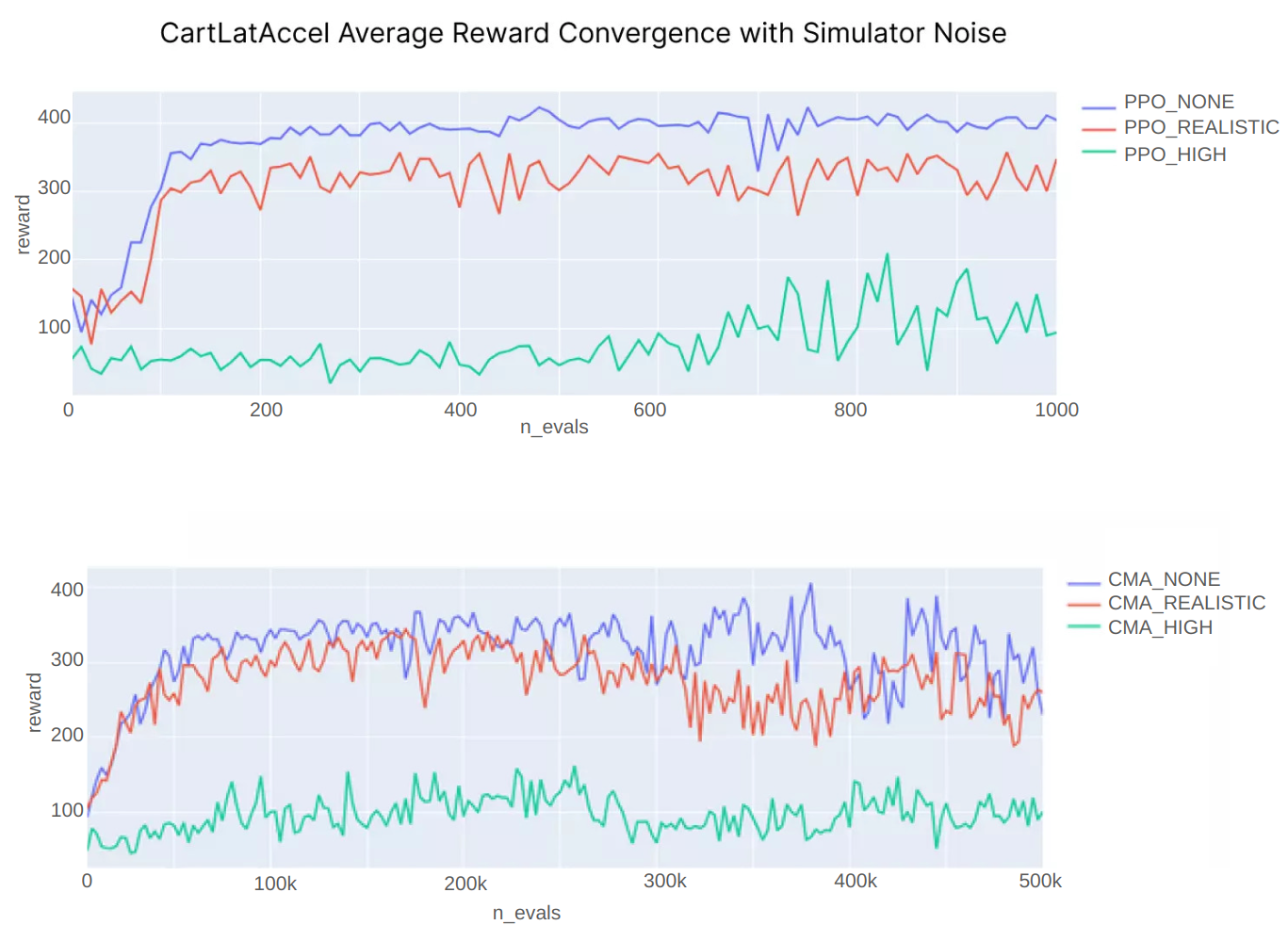

CMA-ES and PPO convergence with different simulation noise settings. REALISTIC noise introduces a realistic level of lag and time-correlated noise, while HIGH noise is catastrophic and makes it impossible to closely follow the trajectory. CMA-ES takes 500x longer than PPO to converge (500K vs 1K evals)
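To illustrate what searching in parameter space means, here is a deliberately simplified (mu, lambda) evolution strategy. It is a stand-in, not CMA-ES: it samples candidates from an isotropic Gaussian and shrinks the step size on a fixed schedule, whereas CMA-ES adapts a full covariance matrix and the step size from the search history. The function name and defaults are assumptions.

```python
import random


def simple_es(f, x0, sigma=0.5, popsize=20, elite=5, iters=100, seed=0):
    """Minimize f over a parameter vector with a basic (mu, lambda) evolution strategy."""
    rng = random.Random(seed)
    mean = list(x0)
    for _ in range(iters):
        # Each candidate is a whole parameter vector (a whole policy), not a single action.
        candidates = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean] for _ in range(popsize)]
        ranked = sorted(candidates, key=f)  # one scalar fitness per candidate
        best = ranked[:elite]
        # Recombine: the new mean is the average of the elite candidates.
        mean = [sum(c[i] for c in best) / elite for i in range(len(mean))]
        sigma *= 0.95  # crude fixed step-size decay (CMA-ES adapts this instead)
    return mean
```

Note that each iteration needs `popsize` full evaluations of `f`, which is why parameter-space search tends to be much less sample-efficient than PPO when both work. For real CMA-ES, the `pycma` package (`cma.CMAEvolutionStrategy` with its `ask`/`tell` loop) is a common choice.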

We make the problem harder by adding noise, action clipping, and a jerk cost that penalizes large changes in actions. We use entropy-based exploration with a Beta distribution, which keeps actions within the steering range while still exploring the action space. PPO continues to perform well as we increase the difficulty, until the problem looks similar to the controls challenge.

Making CartLatAccel harder to resemble the controls challenge
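A minimal sketch of a Beta-distributed action head and a jerk penalty, assuming the policy network already outputs the two Beta parameters `a` and `b` (the network itself and the entropy bonus are not shown). Because the Beta distribution has support (0, 1), rescaling a sample to the steering range always yields a valid action, so no clipping is needed and the log-probability used by PPO stays exact. The function names and the steering range are hypothetical.

```python
import math
import random


def beta_log_prob(x01: float, a: float, b: float) -> float:
    """Log-density of Beta(a, b) at x01 in (0, 1)."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return log_norm + (a - 1) * math.log(x01) + (b - 1) * math.log(1 - x01)


def sample_bounded_action(a, b, low, high, rng):
    """Sample Beta(a, b), rescale to [low, high], and return (action, log_prob).

    The log_prob includes the change-of-variables term for the linear rescaling.
    """
    # Guard against an exact 0 or 1 from floating-point rounding before taking logs.
    x = min(max(rng.betavariate(a, b), 1e-12), 1 - 1e-12)
    action = low + (high - low) * x
    log_prob = beta_log_prob(x, a, b) - math.log(high - low)
    return action, log_prob


def jerk_penalty(actions, dt):
    """Mean squared rate of change of consecutive actions (penalizes jerky steering)."""
    diffs = [(actions[i] - actions[i - 1]) / dt for i in range(1, len(actions))]
    return sum(d * d for d in diffs) / max(len(diffs), 1)
```

A Gaussian head, by contrast, puts probability mass outside the steering range, and clipping those samples makes the stored log-probabilities inconsistent with the actions actually applied.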

Evolving a generic controller

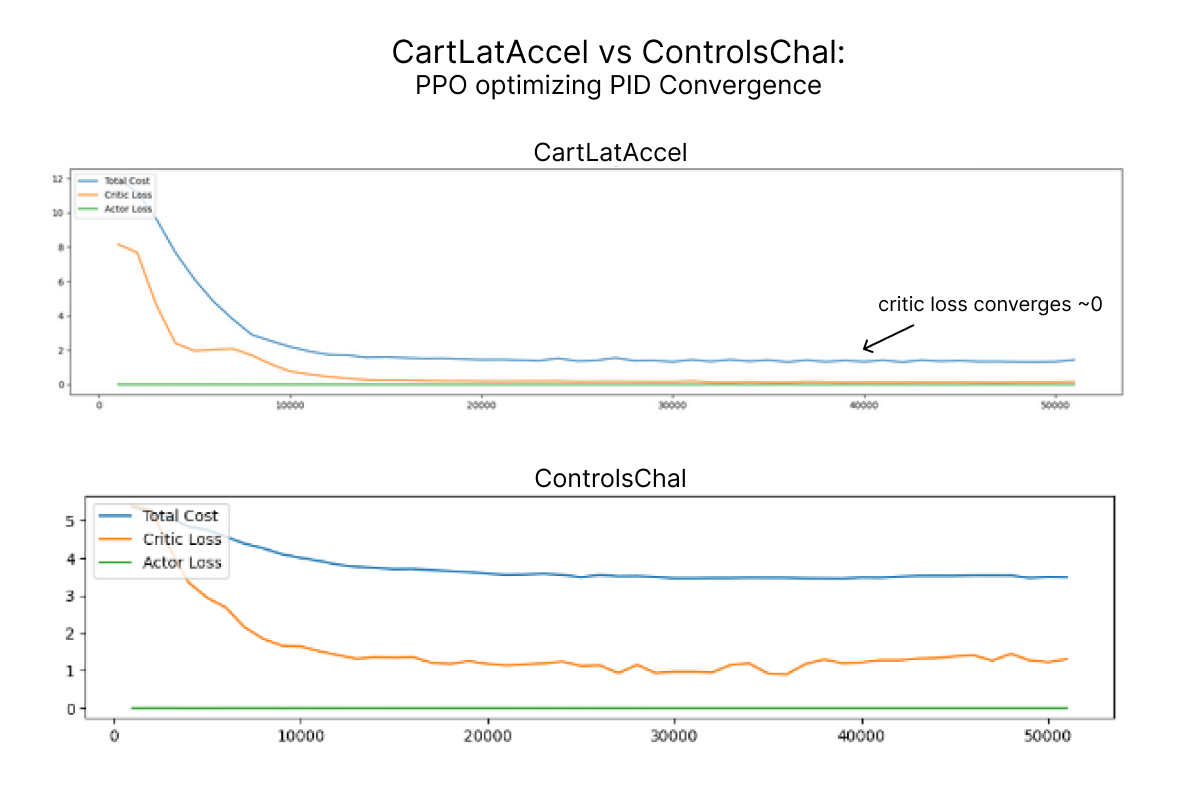

Applying the same methods to the controls challenge, we find that the success of our PPO algorithm doesn’t translate well, but evolutionary methods can still find good solutions. PPO improves over the course of training but doesn’t converge to good values. It’s not obvious why this problem is so much harder than the noisy cart example, but it is likely related to the more complex noise and sampling introduced by the GPT-based simulator. The noise can contain intricate temporal correlations, since the GPT takes its previous noisy samples as context.

PPO converges to optimal PID values on CartLatAccel but not on the controls challenge
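The difference between independent and time-correlated noise can be illustrated with an AR(1) process, the simplest model in which each noise sample depends on the previous one. This is only an analogy: it is an assumption for illustration, not a model of how the GPT-based simulator generates its samples.

```python
import random


def white_noise(n, std, rng):
    """Independent Gaussian samples: no memory between steps."""
    return [rng.gauss(0.0, std) for _ in range(n)]


def ar1_noise(n, std, phi, rng):
    """AR(1) noise x_t = phi * x_{t-1} + e_t, scaled so the stationary std equals `std`."""
    innovation_std = std * (1.0 - phi ** 2) ** 0.5
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, innovation_std)
        out.append(x)
    return out


def lag1_autocorr(xs):
    """Sample correlation between consecutive values."""
    mean = sum(xs) / len(xs)
    num = sum((xs[i] - mean) * (xs[i - 1] - mean) for i in range(1, len(xs)))
    den = sum((x - mean) ** 2 for x in xs)
    return num / den
```

Both processes have the same per-step variance, but with `phi = 0.9` an error tends to persist for many steps, so a disturbance looks like a slow drift that a controller can easily mistake for a change in the car’s response.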

Evolutionary methods operate at the parameter level and don’t require a gradient signal. Optimizing at the parameter level also turns the problem from one of Markovian state transitions into a contextual bandit problem, a framing that makes it similar to a single-step RL problem: each rollout consists of only one action (the current guess for the parameters) and one reward (based on the average cost over that rollout).

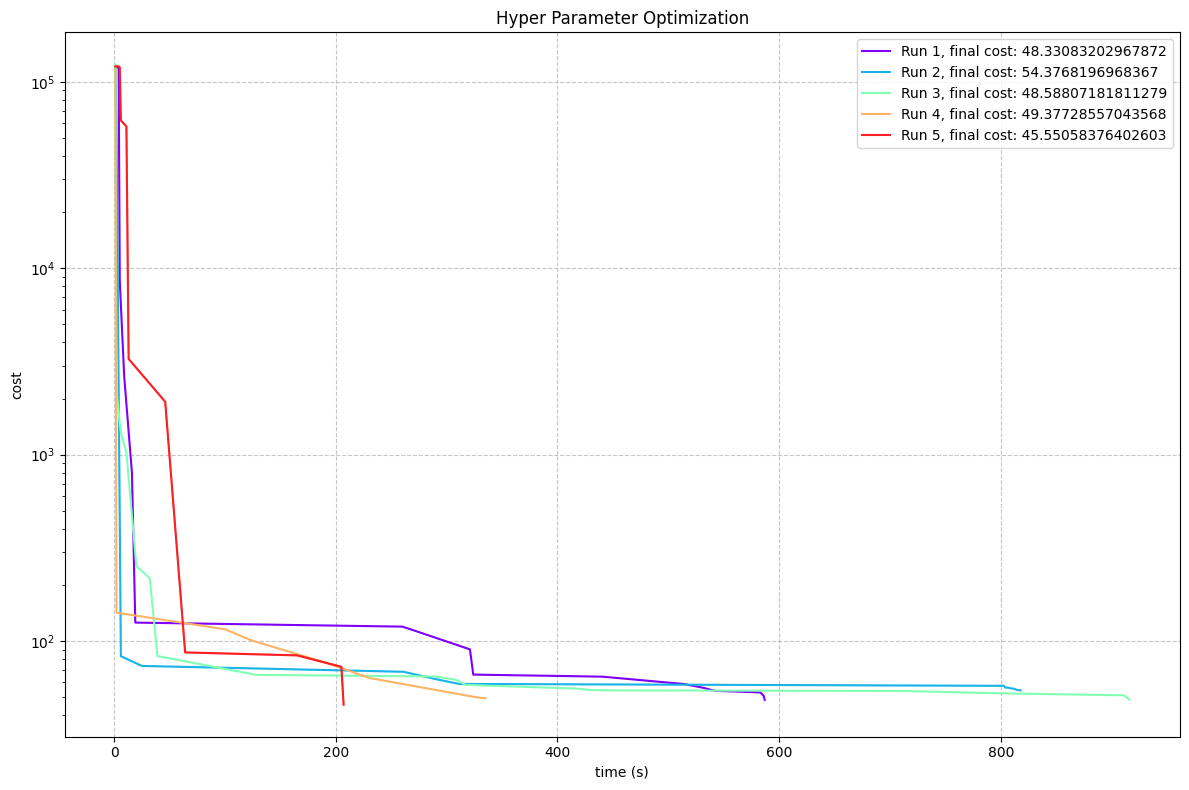

CMA-ES converges to the optimal PID solution with 20 segments over 5 training runs; total time <10 min (1.78 evals/s = 9 min)
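The bandit framing can be sketched as a black-box objective: one parameter vector goes in, one averaged cost comes out. The plant below is a hypothetical first-order lag with noise, not the controls-challenge simulator, and the gains, plant constants and function names are assumptions for illustration.

```python
import random


def episode_cost(params, targets, seed=0, plant_gain=1.5, alpha=0.3, noise_std=0.05):
    """Run one rollout with fixed (kp, ki, kd) gains and return its average squared error.

    To the optimizer this is a single step: the whole parameter vector is the "action"
    and the returned scalar is the only reward.
    """
    kp, ki, kd = params
    rng = random.Random(seed)
    lataccel, integral, prev_err, cost = 0.0, 0.0, 0.0, 0.0
    for target in targets:
        err = target - lataccel
        integral += err
        action = kp * err + ki * integral + kd * (err - prev_err)  # discrete-time PID
        prev_err = err
        # Hypothetical plant: lateral acceleration lags toward plant_gain * action, plus noise.
        lataccel += alpha * (plant_gain * action - lataccel) + rng.gauss(0.0, noise_std)
        cost += (target - lataccel) ** 2
    return cost / len(targets)


def average_over_segments(params, segments):
    """Average the episode cost over several segments to get a less noisy fitness value."""
    return sum(episode_cost(params, seg, seed=i) for i, seg in enumerate(segments)) / len(segments)
```

Any black-box optimizer, such as CMA-ES, can then search over `params` using only these scalar scores; averaging over more segments reduces the noise in each score at the price of more simulation time per evaluation.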

By adding more inputs, we can use evolutionary search to evolve a tiny, feature-rich controller that reaches the best solution yet. CMA-ES converges to a cost of 48.0 on the controls challenge by optimizing 6 parameters of a custom feedback controller.
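The features of the custom controller are not listed here, so the following is a hypothetical example of what a 6-parameter feedback controller could look like: PID extended with a feedforward on the target, a road-roll compensation term and a speed-dependent proportional gain. Every feature choice and name below is an assumption; the point is only that the whole controller reduces to a flat 6-vector an evolutionary optimizer can tune.

```python
from dataclasses import dataclass, field


@dataclass
class SixParamController:
    """Hypothetical 6-parameter controller (feature choice is illustrative, not the post's)."""
    kp: float
    ki: float
    kd: float
    k_ff: float     # feedforward on target lateral acceleration
    k_roll: float   # compensation for lateral acceleration caused by road roll
    k_speed: float  # scales the proportional gain with speed
    dt: float = 0.1
    _integral: float = field(default=0.0, init=False)
    _prev_error: float = field(default=0.0, init=False)

    def update(self, target_lataccel, current_lataccel, roll_lataccel, v_ego):
        error = target_lataccel - current_lataccel
        self._integral += error * self.dt
        derivative = (error - self._prev_error) / self.dt
        self._prev_error = error
        p_gain = self.kp * (1.0 + self.k_speed * v_ego)
        return (p_gain * error + self.ki * self._integral + self.kd * derivative
                + self.k_ff * target_lataccel - self.k_roll * roll_lataccel)

    @classmethod
    def from_vector(cls, params):
        """Build a controller from the flat 6-vector an evolutionary optimizer proposes."""
        params = list(params)
        if len(params) != 6:
            raise ValueError("expected exactly 6 parameters")
        return cls(*params)
```

Keeping the controller this small keeps the search space low-dimensional, which is where parameter-space methods like CMA-ES remain practical.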

The future

Modern RL doesn’t yet appear robust enough to converge reliably in noisy environments. Famous RL successes have been in games such as chess and Go, where there is a clean reward signal and the state space is discrete. There is no realistic continuous noise in these problems, whereas when acting in the real world, this noise is an essential part of the problem.

When humans drive a car, we learn from direct experience, similar to on-policy algorithms, and we do so in a noisy environment with high sample efficiency. The open question is when RL techniques will be able to do the same. Imagine an adaptable controller that can learn to drive your car in a few minutes, just as you would. That’s where RL and robotics are heading. For now, the controls challenge remains a good benchmark of what ML cannot yet do.

Ellen Xu, Autonomy Intern @comma.ai
