Self-Driving Car For Free

Meet your cheapest hardware yet, the comma zero, aka webcams + laptop + car harness + black panda (the harness and panda are only needed if you want to control a real car). We mentioned that our theme for 2020 is externalization, and this is our latest progress on it.

Overview

Until now, the easiest ways to experience chill driving have been to buy a Tesla and use Autopilot, or to be sensible and get an EON/comma two from us to run openpilot on a supported car you probably already own.

But what if you are not ready for that commitment, and are simply curious and want to see the openpilot magic in action? Fear not, we have made things easier and more accessible than ever.

Let’s first take a step back and see what openpilot needs. For sensors, openpilot relies mostly on a forward-facing camera to see the road and a driver-facing camera to see the driver. The comma two uses an IR camera to see the driver at night, but besides that there is nothing particularly special about these cameras.

To control a real car, a physical interface between openpilot and the car is also needed, such as the car harness + black panda. Other than sensors and a car interface, all that openpilot needs is a Linux platform with sufficient compute. And so we made openpilot work on an Ubuntu laptop connected to two commodity webcams.

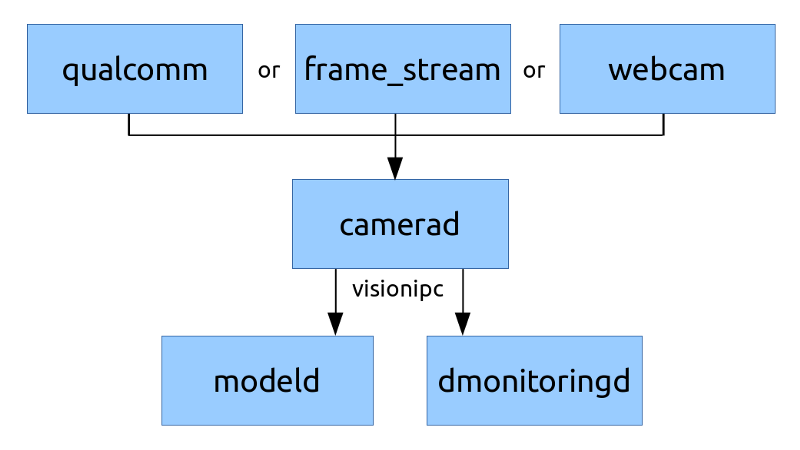

How it works is pretty straightforward: a new camera type called “webcam” has been added to camerad. When openpilot is built with the webcam flag, the default Qualcomm cameras are automatically replaced with the webcams connected to the computer. That’s it!
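To make the idea concrete, here is a rough sketch of swapping the camera source behind a common interface. This is not openpilot's actual code: the real switch happens at build time inside camerad, and every name below (`Camera`, `QcomCamera`, `WebcamCamera`, `make_cameras`, the device indices) is hypothetical and only there for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class Camera(Protocol):
    """Anything that can hand out frames (hypothetical interface)."""
    name: str

    def read(self) -> bytes: ...


@dataclass
class QcomCamera:
    """Stand-in for the default on-device camera (hypothetical)."""
    name: str

    def read(self) -> bytes:
        # A real backend would return image data; this returns a placeholder.
        return f"qcom-frame:{self.name}".encode()


@dataclass
class WebcamCamera:
    """Stand-in for a USB webcam (hypothetical)."""
    name: str
    device_index: int  # which webcam on the computer; the numbering is an assumption

    def read(self) -> bytes:
        return f"webcam{self.device_index}-frame:{self.name}".encode()


def make_cameras(use_webcam: bool) -> Dict[str, Camera]:
    """Return road and driver cameras for the chosen backend."""
    if use_webcam:
        return {
            "road": WebcamCamera("road", device_index=0),
            "driver": WebcamCamera("driver", device_index=1),
        }
    return {"road": QcomCamera("road"), "driver": QcomCamera("driver")}
```

The rest of the pipeline only ever calls `read()` on the "road" and "driver" cameras, so it does not need to know which backend produced the frames.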

The setup

Let’s get right into the action! Follow the instructions here to set up your webcam-enabled openpilot. Below is the hardware prototype we built (you are very welcome to help improve it!).

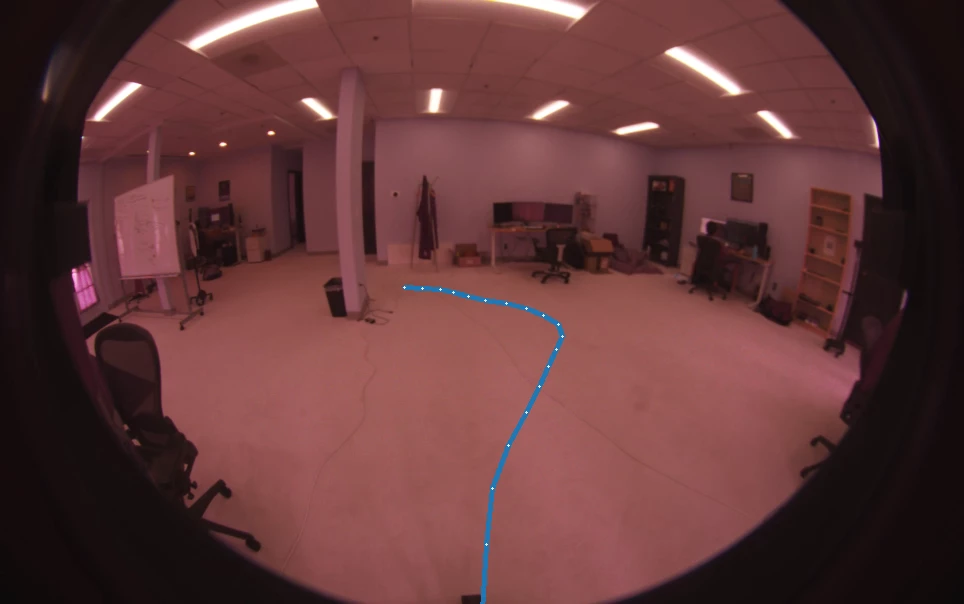

The drive

So how was our test drive? After a short session of calibrating the camera, openpilot was successfully engaged! Check out the animation at the beginning if you haven’t done so, or just watch it one more time. Here are two more demos, for lane change assist and driver monitoring.

Caveats

Openpilot was optimized for EONs and comma twos; the webcam port serves as proof of openpilot’s platform independence. However, unreliable USB connections and wobbly webcams are a serious concern. This setup should only be used for experiments in a safe, closely monitored environment.

It is also important to note that the webcams are different from the cameras openpilot’s models were trained on, so openpilot is expected to perform significantly worse with this setup. If you just want a good openpilot experience, we recommend buying the comma two.

Going beyond

With this port, we show that openpilot does not rely on a specific sensor suite to work. A camera and some compute are all that is absolutely required. Openpilot already supports a wide range of vehicles and environments, but what is beyond that? Virtual cars and virtual worlds.

Humans actually drive in virtual worlds a lot: racing games and driving simulators! Beyond pulling off boring commutes and long road trips, openpilot should (theoretically) also be able to drive in any sufficiently realistic simulated scene, like GTA and Forza.

Here’s something simple to get started with: CARLA, the simulator. When we held the 48-hour comma hackathon back in February, one of the exciting projects was a challenge to make openpilot drive in CARLA for as long as possible without crashing (car crashes or software crashes). The API to inject openpilot controls into CARLA has been written and tested, but it currently only supports taking the image array directly from the CARLA engine, which might contain detrimental CG artifacts. Replacing this part with the webcam is therefore a good place to start. Openpilot’s neural network expects consecutive frames with natural movement, so we recommend running the graphics at 60 FPS or higher.
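If you want a starting point, the loop of such a bridge might look roughly like the sketch below. It is only a sketch under stated assumptions: `grab_frame`, `run_model` and `apply_controls` are hypothetical callables you would supply yourself (they are not openpilot or CARLA APIs), and the `(steer, accel)` layout of the controls is made up. The one thing it does show is steady pacing: at 60 FPS each frame gets a budget of 1/60 s, about 16.7 ms.

```python
import time
from typing import Callable, Tuple

Frame = bytes
Controls = Tuple[float, float]  # (steer, accel); hypothetical layout


def frame_interval(fps: float) -> float:
    """Seconds between consecutive frames at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1.0 / fps


def run_bridge(
    grab_frame: Callable[[], Frame],
    run_model: Callable[[Frame], Controls],
    apply_controls: Callable[[Controls], None],
    fps: float = 60.0,
    max_frames: int = 600,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Feed frames to the model at a steady rate.

    Returns the number of ticks that overran their time budget.
    """
    interval = frame_interval(fps)
    late = 0
    next_deadline = clock() + interval
    for _ in range(max_frames):
        frame = grab_frame()              # e.g. a webcam pointed at the screen
        apply_controls(run_model(frame))  # push steering/accel into the simulator
        remaining = next_deadline - clock()
        if remaining > 0:
            sleep(remaining)              # wait out the rest of this frame's budget
        else:
            late += 1                     # this tick took longer than one interval
        next_deadline += interval
    return late
```

The `clock` and `sleep` arguments are injectable so the loop can be tried out with a fake clock before pointing it at a real simulator. A growing `late` count would suggest the capture or the model cannot keep up with the chosen frame rate.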

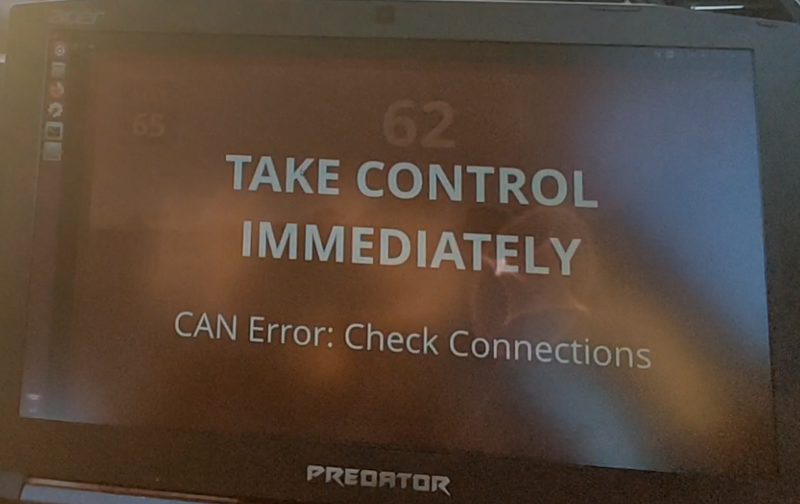

CARLA is too easy? Now the real fun begins: try to make openpilot control the car in your favorite game, with a webcam pointing at the screen. We can’t wait to see someone stream openpilot driving in EuroTruck for 24 hours on Twitch.

Written by: Weixing

Join us

In case you did not know yet, comma.ai is hiring! Check out the job openings at our beautiful San Diego office and take the programming challenge.

Last but not least, remember to join our Discord and follow us on Twitter to keep up with the game!