Autonomy

The autonomy team’s goal is to build robotic agents: agents that are open-source, useful, and that you can fully own. Our main product and current focus is building the world’s best ADAS. But the methods and strategies we are using to solve these problems are generic; they are not specific to driving.

A comma three driving a car to taco bell fully end-to-end

Self-driving is a great place to start if you want to develop general-purpose robotics. A car is a robot with pretty simple actuators, and it is easy to collect data showing what good driving looks like. Most importantly, partial self-driving is useful and something people want to pay money for. Humanoid robots are exciting, but incredibly difficult. There are many useful intermediates that must be achieved before humanoid robots are realistic as a product. Companies that don’t recognize this will fail, just as overly ambitious self-driving car companies failed over the last decade.

Solving self-driving cars with generic methods

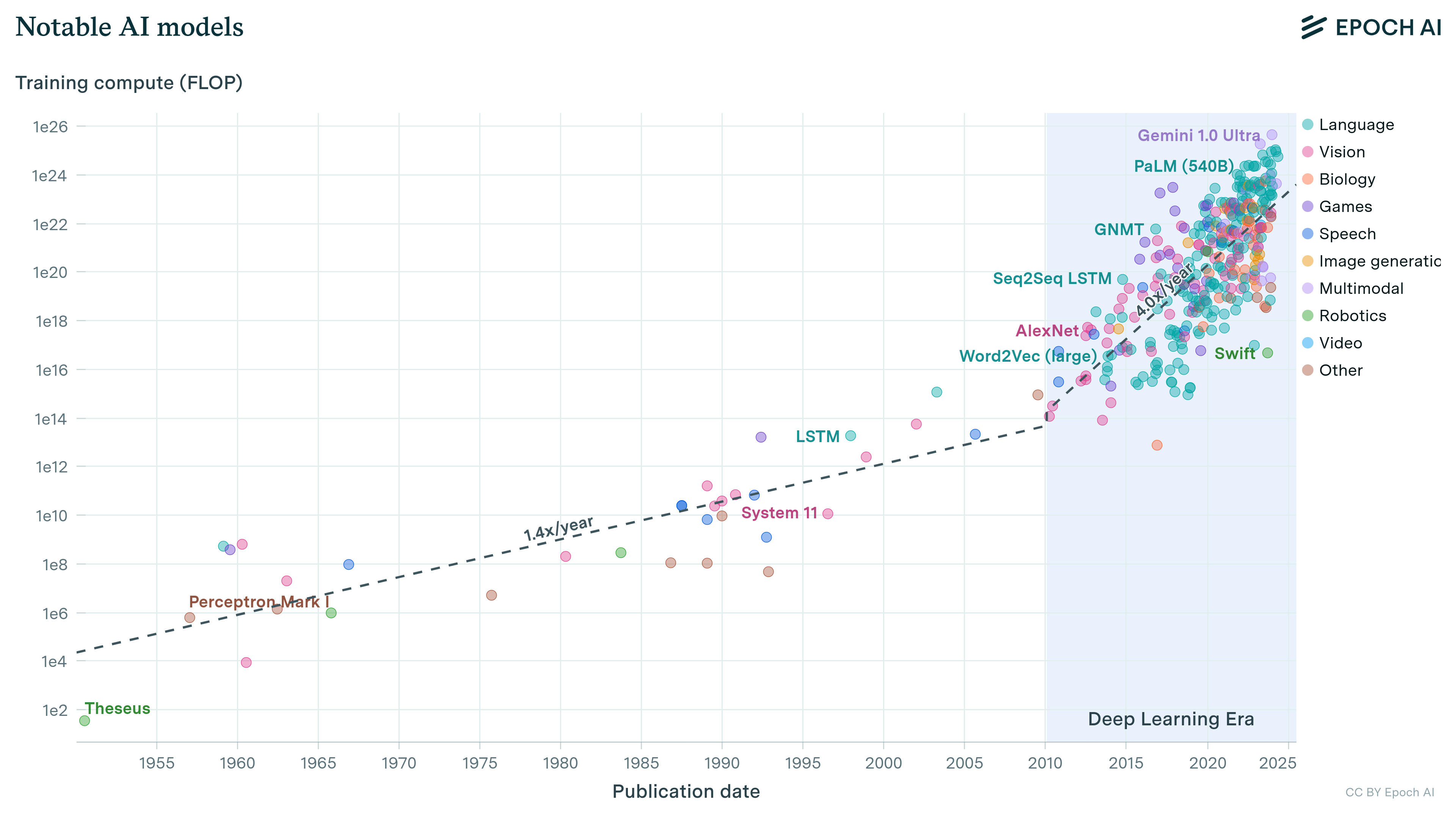

comma was founded on the idea that generic learning methods are superior. The bitter lesson in ML is that we must make methods that learn how to discover, not contain what we have discovered. In a world where compute continues to increase exponentially, methods that scale with compute will outcompete methods that don’t.

Exponential FLOP usage of models

In practice, a generic strategy for autonomous driving means making a driving agent that learns how to drive by observing human driving behavior. We have collected millions of miles of human driving data; we just need an ML system to learn how to drive like those humans. This is a dream comma has had since the beginning. But what was once a dream is now reality. Today openpilot drives over 50% of the miles driven by our fleet of 10k+ devices. It was never trained to stay between lanelines or follow any particular rules, yet it still learned how to drive. This has been true for a couple of years, but we are now on the cusp of another breakthrough. In this blog post I’ll talk about what we’ve achieved, and what’s still coming for the autonomy team.

What we’ve achieved

End-to-end lateral

openpilot has always predicted the path the human would drive. But just learning the most likely human path off-policy is not enough for the car to drive well. The car will just drift out of the lane, something we’ve discussed several times. For most of the early years at comma, lanelines were still used in openpilot to make the car stay in lane, and the end-to-end path was only used in situations where the lanelines were unclear. March 2021 was the first time we shipped models that could do steering control well without being told to stay between lanelines, but they were released under an opt-in toggle. In July 2022, we finally released end-to-end lateral as the default.

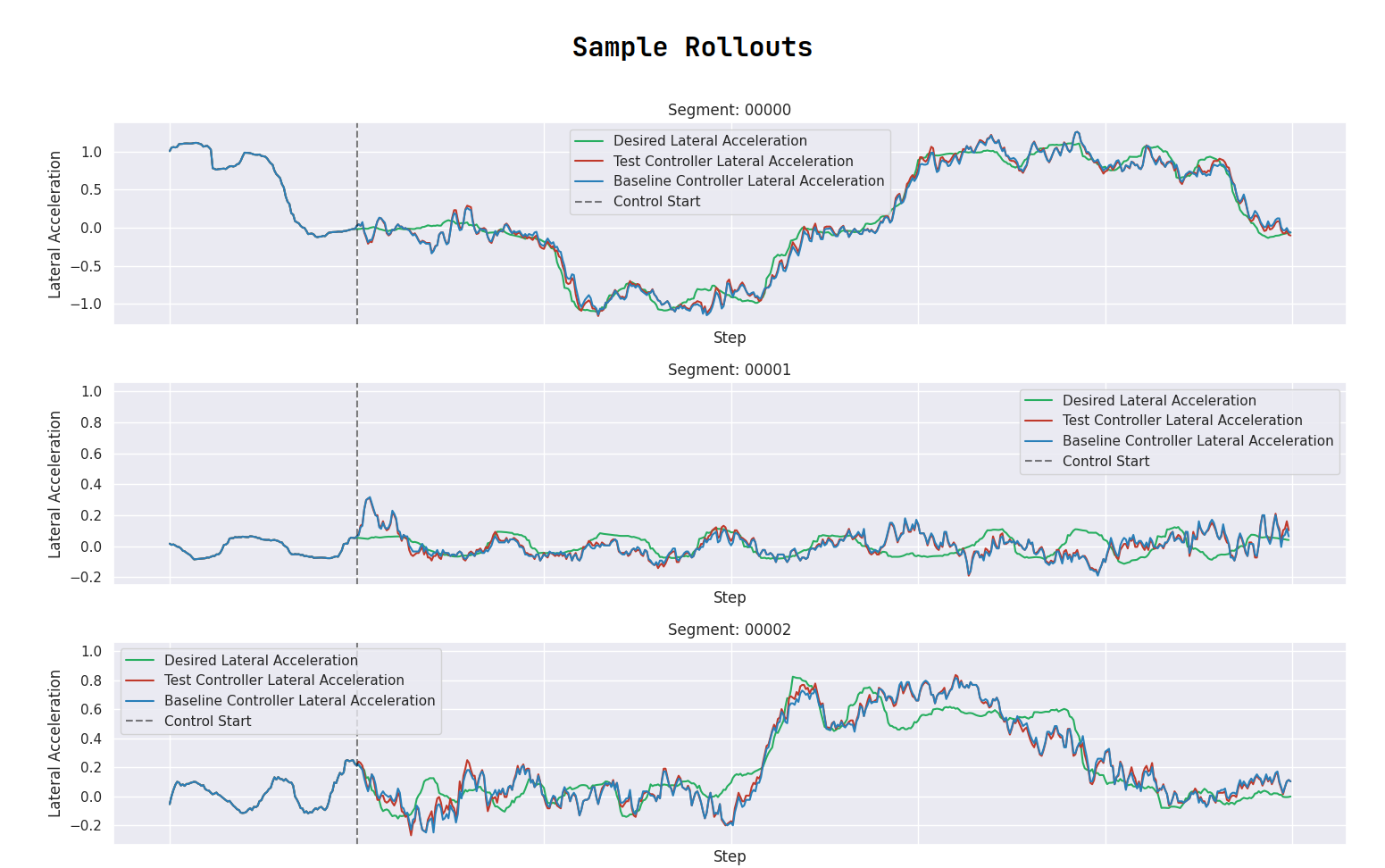

Driving models need to learn on-policy in a noisy simulator to drive well

End-to-end longitudinal

openpilot has used a pretty classical radar policy for longitudinal control since the beginning: a combination of model predictions and radar measurements tells the car to slow down or speed up, just like your car’s ACC. We’ve increasingly relied on the ML model’s predictions, but what is shipped in release today is still fundamentally this same approach. In November 2022 we shipped end-to-end longitudinal behind an experimental toggle, where it has remained since.

We will be driven to Taco Bell:

• without a disengagement

• on a comma three

• in a stock, production, car

• using open source code

• by the end of the year

#level2 #adas #leftturn #rightturn #stoplight #fsd pic.twitter.com/5VpXjchhM5

comma (@comma_ai), February 19, 2022

End-to-end nav

By the end of 2022 we also hit our goal of driving to Taco Bell. To get to a specific place, you need to tell openpilot where you want to go; otherwise it would just drive on the same road forever. We wanted to use an end-to-end approach, so we just fed the video stream of turn-by-turn navigation into the driving model so it knows where you want to go. We released this feature in experimental mode in 2023, but ended up removing it because it was slowing down our progress in shipping improvements to the longitudinal policy, which is our biggest priority. We will add it back once a lack of navigation becomes the cause of the majority of our users’ disengagements.

What’s still coming

World model as a simulator

Our biggest obstacle over the last couple of years has been the quality of the simulator we train the driving models in. Any imperfections in that simulation will cause issues in training. Our simulator, which works by estimating the depth of the scene and reprojecting it, has some fundamental issues. Depth estimation is hard, not all pixels have a depth (reflections, for example), and it doesn’t answer the question of what to inpaint in areas that you couldn’t see in the original view. All these issues have been limiting the quality of our driving models, so we need a new simulator. The solution is using a generative model to simulate driving. A simulator like that will have less predictable artifacts and requires fewer assumptions about the world (like depth, flat road, etc.), and so is also more generic.
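To make the inpainting problem concrete, here is a toy sketch of depth-based reprojection on a single image row. It is not comma’s simulator: `reproject_row`, `focal_px`, and `shift_m` are hypothetical names, and the pinhole disparity formula and z-buffer are simplifying assumptions chosen for illustration.

```python
def reproject_row(colors, depths, focal_px, shift_m):
    """Warp one image row to a camera shifted sideways by shift_m metres.

    Each pixel moves by a disparity of focal_px * shift_m / depth, so near
    pixels move further than far ones. Returns the new row, with None where
    no source pixel landed (a hole that would need to be inpainted).
    """
    width = len(colors)
    out = [None] * width
    out_depth = [float("inf")] * width
    for u, (color, depth) in enumerate(zip(colors, depths)):
        new_u = u - round(focal_px * shift_m / depth)
        # Keep the pixel only if it lands in frame and is the nearest
        # surface seen so far at that column (a simple z-buffer).
        if 0 <= new_u < width and depth < out_depth[new_u]:
            out[new_u] = color
            out_depth[new_u] = depth
    return out


# A far background ("b", 50 m) with a near object ("N", 5 m) in the middle.
colors = list("bbbbNNbbbb")
depths = [50.0] * 4 + [5.0] * 2 + [50.0] * 4

warped = reproject_row(colors, depths, focal_px=10.0, shift_m=1.0)
holes = [u for u, c in enumerate(warped) if c is None]
print(warped)
print(holes)  # [4, 5]: the background that was hidden behind the object
```

The near object slides two pixels while the background barely moves, which uncovers two columns the original camera never saw. Nothing in the source image says what belongs there, and that is the gap a generative simulator has to fill.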

A driving model learning in a learned simulator

We’ve had success with this approach in the last couple of years and have recently been driving on models trained in this simulator. It’s even available for people to try. We expect these models to improve quickly and to be a revolution in the quality of openpilot’s driving. You’ll hear more details about this simulator soon from our Head of ML. We intend to ship a model trained in this new architecture before the end of October 2024.

The need for RL

We managed to make openpilot drive end-to-end by learning on-policy in a learned simulator. But that simulator is trained off-policy with a one-step assumption. This means that during simulation rollouts, the model is exposed to its own generated data, which may not be fully captured in the training data. This effect can cause simulations to diverge, especially longer simulations. To fix this, the model should be trained by rolling out entire simulation sequences and rewarding the model for producing realistic rollouts. This on-policy training, combined with optimizing a non-differentiable reward, makes it a Reinforcement Learning problem.
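The gap between one-step accuracy and rollout accuracy can be shown with a toy example. This is only a sketch under made-up assumptions: `true_step` and `learned_step` are hypothetical one-dimensional dynamics, not anything from comma’s simulator. The learned model is slightly wrong, and the only difference between the two evaluations is whether it is fed real states or its own outputs.

```python
def true_step(x):
    """Ground-truth dynamics (hypothetical): move forward by one unit."""
    return x + 1.0


def learned_step(x):
    """A one-step model with a small, state-dependent error (hypothetical)."""
    return 1.02 * x + 1.0


def one_step_errors(x0, n):
    """Error when every prediction starts from a real state."""
    errors, x = [], x0
    for _ in range(n):
        errors.append(abs(learned_step(x) - true_step(x)))
        x = true_step(x)  # always continue from real data
    return errors


def rollout_errors(x0, n):
    """Error when the model is fed its own previous output."""
    errors, x_true, x_sim = [], x0, x0
    for _ in range(n):
        x_true = true_step(x_true)
        x_sim = learned_step(x_sim)  # generated data becomes the next input
        errors.append(abs(x_sim - x_true))
    return errors


one_step = one_step_errors(1.0, 50)
rollout = rollout_errors(1.0, 50)
print(f"one-step error at step 50: {one_step[-1]:.2f}")
print(f"rollout error at step 50:  {rollout[-1]:.2f}")
```

The one-step error stays small because each prediction starts from a real state. In the rollout, every error becomes part of the next input, so the errors compound. A loss that only scores single steps never sees this, which is why the text argues for scoring whole rollouts.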

The comma controls challenge is a difficult RL problem

The policy models could also benefit from RL training. The policy models are trained on-policy to output human-like trajectories for curvature and acceleration. Low-level feedback controllers then try to achieve those trajectories. These low-level controllers are not perfect, and cause issues with driving performance. We’ve made a lot of progress making our controllers smarter, but for optimal performance the controllers themselves must be learned too, for which we again need RL.
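As a rough illustration of how a low-level feedback controller can fall short of the trajectory it is given, here is a minimal sketch. It is not openpilot’s controller: the `track` function, the first-order plant with time constant `tau`, and the gains `kp` and `ki` are all assumptions chosen to keep the example small.

```python
def track(target, kp, ki, steps=200, dt=0.05, tau=0.5):
    """PI controller driving a first-order plant toward a constant target.

    Plant (assumed): tau * dy/dt = u - y, integrated with Euler steps.
    Returns the plant output at every step.
    """
    y, integral, history = 0.0, 0.0, []
    for _ in range(steps):
        error = target - y
        integral += error * dt
        u = kp * error + ki * integral  # controller command
        y += dt * (u - y) / tau         # plant response to the command
        history.append(y)
    return history


p_only = track(target=1.0, kp=1.0, ki=0.0)
pi = track(target=1.0, kp=1.0, ki=2.0)
print(f"P-only settles at {p_only[-1]:.3f}")  # stuck near half the target
print(f"PI settles at     {pi[-1]:.3f}")      # reaches the target
```

With these gains the proportional-only controller settles at half the requested value, and adding the integral term fixes that for this particular plant. Real cars differ in actuator lag, friction, and limits, so hand-tuned gains only go so far, which is the motivation for learning the controller.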

RL solutions are in their infancy, and have had little practical success. We expect this will change soon and solve these issues. Eventually we will also allow on-device RL training. This will let openpilot learn to understand the differences between individual cars and control them optimally, as well as learn from disengagements to improve over time.

The future of robotics

If you look at the dates in this write-up you’ll notice that all achievements happened in the last couple of years. That is despite comma working towards them since its inception. Here is George in 2015 describing almost exactly what we ended up shipping. We’ve prioritized development speed for several years and are now reaping the rewards. I hope you can share our sense of optimism about this clear acceleration.

Your future household robot?

The methods we use are not specific to driving; they are generic learning strategies that will scale to all of robotics. And they will be useful to create robots that do your dishes and your laundry. When those robots do eventually come, we want to make sure there are some that you can buy that run open-source software. A useful robot that is not owned by some corporation, but truly belongs to you.

Harald Schäfer,

Head of Autonomy @ comma.ai
