openpilot 0.9.0

Every release openpilot feels a little more alive, and that’s more true for this release than ever. We’ve previously shipped end-to-end lateral planning from the model, and now we’re beginning to ship end-to-end longitudinal planning. In this release, we introduce experimental mode, where we enable alpha features that are not yet ready for regular openpilot.

openpilot waiting at a red light - by DALL·E 2

Taco Bell and beyond

Our goal for openpilot is to build a superhuman driving agent. We want to do this by letting a machine learning model learn how to drive like a human, by observing human driving behavior from our fleet. We call this approach end-to-end. We consider this problem to have three main components: end-to-end lateral planning, end-to-end longitudinal planning, and finally navigate-on-openpilot. Navigate-on-openpilot involves feeding in navigation data of where you want to go, so that openpilot can take the correct exits and turns. Those three components, with enough iteration and refinement, are all that openpilot will need to be able to drive almost anywhere. It should, for example, be able to drive to Taco Bell from our office.

We will be driven to Taco Bell:

• without a disengagement

• on a comma three

• in a stock, production, car

• using open source code

• by the end of the year

#level2 #adas #leftturn #rightturn #stoplight #fsd pic.twitter.com/5VpXjchhM5

comma (@comma_ai), February 19, 2022

Experimental mode

Previously, we’ve successfully shipped end-to-end lateral planning: first behind a toggle, and later as the default. Now, we’re introducing a toggle again, this time for experimental mode. Alpha features will be active in experimental mode before they’re ready for chill mode. In this release, experimental mode activates end-to-end longitudinal planning. This means that openpilot will drive at the speed the model thinks a human would drive: slowing down for turns, stopping at traffic lights, going for green lights, stopping at stop signs, and generally driving at a speed it deems appropriate for the scene. The set cruise speed is only used as an upper bound, not as a target. The lead policy is also still active, so openpilot will maintain a similar distance to leads as it normally would.
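To make the “upper bound, not a target” distinction concrete, here is a minimal sketch phrased in terms of speeds only. It is a deliberate simplification, not openpilot’s planner, which is considerably more involved; `LongitudinalInputs` and `target_speed` are hypothetical names, and the lead policy is reduced to a single speed cap for illustration.

```python
from dataclasses import dataclass


@dataclass
class LongitudinalInputs:
    model_speed: float       # m/s: speed the end-to-end model thinks a human would drive
    set_speed: float         # m/s: cruise speed set by the driver
    lead_speed_limit: float  # m/s: hypothetical cap imposed by the lead-following policy


def target_speed(inp: LongitudinalInputs, experimental: bool) -> float:
    """Choose a target speed (hypothetical, speed-only simplification)."""
    if experimental:
        # Experimental mode: follow the model, using the set speed only as a ceiling.
        desired = min(inp.model_speed, inp.set_speed)
    else:
        # Chill mode: the set speed is the target.
        desired = inp.set_speed
    # The lead policy stays active in both modes.
    return min(desired, inp.lead_speed_limit)


# Slowing for a turn: the model wants 10 m/s although the cruise speed is set to 30 m/s.
print(target_speed(LongitudinalInputs(10.0, 30.0, 40.0), experimental=True))  # 10.0
```

In chill mode the same inputs would yield the 30 m/s set speed; in both modes a slower lead still wins.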

In the future, navigate-on-openpilot will first be rolled out in experimental mode. Over time, end-to-end longitudinal features, such as accelerating faster in some situations, will graduate to chill mode.

Remember, this is an alpha feature, and frequent mistakes are expected. If you want a reliable and mature experience, experimental mode is not for you; stick with chill mode instead. As always, you must stay attentive and be ready to take over at all times.

New driving model

Thanks to simplifications in the training stack, a bigger datacenter, and using GPUs for simulator rollouts, training a model from scratch now takes 36 hours! Previous releases’ models were trained in around a week.

New information bottleneck

In “End-to-end lateral planning,” we explain the need for an information bottleneck to prevent “cheating.” Previous models used a KL divergence loss between the feature vector and a non-informative prior (unit Gaussian) as the information bottleneck. This approach required careful tuning of the weight of that loss, and only constrained the information flow on average over training samples.
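For reference, this kind of KL penalty can be sketched as follows, assuming (as in a VAE) that the encoder outputs a mean and a log-variance per feature dimension; `kl_to_unit_gaussian`, `bottleneck_loss`, and `beta` are hypothetical names. The closed form is the standard KL divergence from a diagonal Gaussian to a unit Gaussian, and the batch mean shows why the constraint only holds on average.

```python
import math


def kl_to_unit_gaussian(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in nats for one feature vector."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def bottleneck_loss(batch_mu, batch_log_var, beta):
    """Batch-averaged KL penalty, scaled by the hand-tuned weight beta."""
    kls = [kl_to_unit_gaussian(m, lv) for m, lv in zip(batch_mu, batch_log_var)]
    return beta * sum(kls) / len(kls)


# One uninformative sample (KL = 0 nats) and one that carries 2 nats.
print(bottleneck_loss([[0.0], [2.0]], [[0.0], [0.0]], beta=1.0))  # 1.0
```

The batch loss of 1 nat looks moderate even though one sample passes twice that, and how hard the penalty bites depends entirely on the choice of `beta`.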

In this release, we introduce a different bottleneck that explicitly bounds the number of bits passed through it. With additive white Gaussian noise at train time, the bottleneck can be viewed as a Gaussian channel, which has a per-sample information capacity of $C = \frac{n}{2}\log_2(1 + \mathrm{SNR})$ bits, where $n$ is the dimension of the feature vector. The same bottleneck is used for all driving outputs (longitudinal and lateral), and we tuned the SNR to give a capacity of approximately 700 bits per frame.
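The capacity formula can be inverted to pick the SNR for a target bit budget. A minimal sketch, assuming a feature width of n = 512, which is a hypothetical value since the real width isn’t stated; `capacity_bits` and `snr_for_capacity` are illustrative names.

```python
import math


def capacity_bits(snr: float, n: int) -> float:
    """Capacity in bits of n parallel Gaussian channels, each with the given SNR."""
    return 0.5 * n * math.log2(1.0 + snr)


def snr_for_capacity(bits: float, n: int) -> float:
    """Invert capacity_bits: the per-dimension SNR that yields `bits` of capacity."""
    return 2.0 ** (2.0 * bits / n) - 1.0


n = 512  # hypothetical feature width; the real width isn't stated
snr = snr_for_capacity(700.0, n)
print(f"SNR = {snr:.2f} gives {capacity_bits(snr, n):.1f} bits per frame")
```

Because capacity grows linearly in n but only logarithmically in SNR, a wider feature vector needs a lower per-dimension SNR to stay at the same 700-bit budget.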

The implementation of this layer in PyTorch is as simple as:

```python
import math, torch
x = torch.nn.functional.normalize(x, dim=-1) * math.sqrt(SNR * x.shape[-1]) + torch.randn_like(x)
```
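The same layer can be exercised without PyTorch. Below is a NumPy sketch, assuming the noise is only added during training: the text says it is added at train time, and dropping it at inference is our assumption. The function name and `training` flag are hypothetical. The check at the end confirms why the layer is a Gaussian channel with per-dimension SNR equal to `snr`: normalization fixes the signal power, and the noise has unit variance.

```python
import numpy as np


def gaussian_bottleneck(x, snr, training, rng=None):
    """Scale x onto a sphere of radius sqrt(snr * n); add unit-variance noise only when training."""
    n = x.shape[-1]
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    # Clamp like torch.nn.functional.normalize (default eps=1e-12) to avoid dividing by zero.
    x = x / np.maximum(norm, 1e-12) * np.sqrt(snr * n)
    if training:
        rng = np.random.default_rng() if rng is None else rng
        x = x + rng.standard_normal(x.shape)  # AWGN with variance 1 per dimension
    return x


rng = np.random.default_rng(0)
feats = rng.standard_normal((1000, 256))
clean = gaussian_bottleneck(feats, snr=4.0, training=False)
noisy = gaussian_bottleneck(feats, snr=4.0, training=True, rng=rng)
# Per-dimension signal power equals snr; the added noise has unit variance.
print(np.mean(clean ** 2), np.var(noisy - clean))
```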

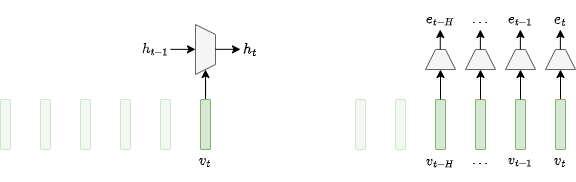

New temporal summarizer

We also transitioned the model’s temporal summarizer from a GRU to an explicit linear encoding with a fixed-length history (closer to an attention layer). GRUs have a strong inductive bias towards learning a temporally compressed representation, which is fed into the next layers. This causes lag and a general slowness in reacting to sudden changes. The new temporal summarizer takes a fixed-length chunk of history as input and embeds each feature vector into a history embedding vector; these embeddings are fed into the next layers. Using this new temporal summarizer made the model snappier and less prone to cheating.
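A minimal NumPy sketch of a fixed-length history summarizer follows. The text doesn’t give sizes or say whether the linear encoding is shared across time steps, so this version assumes one weight matrix per history slot, which makes each slot’s time offset explicit. `FixedHistorySummarizer`, `feat_dim`, `embed_dim`, and `history_len` are hypothetical names.

```python
from collections import deque

import numpy as np


class FixedHistorySummarizer:
    """Hypothetical sketch: linear per-slot embedding of the last `history_len` feature vectors."""

    def __init__(self, feat_dim, embed_dim, history_len, seed=0):
        rng = np.random.default_rng(seed)
        # One weight matrix per history slot, so each slot's time offset is explicit.
        self.w = rng.standard_normal((history_len, feat_dim, embed_dim)) / np.sqrt(feat_dim)
        # Zero-padded history; appending past maxlen drops the oldest frame.
        self.history = deque([np.zeros(feat_dim)] * history_len, maxlen=history_len)

    def step(self, feat):
        """Push the newest frame's features; return (history_len, embed_dim) embeddings."""
        self.history.append(np.asarray(feat, dtype=float))
        h = np.stack(list(self.history))           # (history_len, feat_dim), oldest first
        return np.einsum("tf,tfe->te", h, self.w)  # embed each slot with its own matrix


summarizer = FixedHistorySummarizer(feat_dim=8, embed_dim=4, history_len=5)
print(summarizer.step(np.ones(8)).shape)  # (5, 4)
```

Unlike a GRU’s hidden state, a frame older than `history_len` steps has no influence at all, and a sudden change reaches the output on the very next step through its own slot rather than being blended into a compressed state.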

Left: RNN temporal summarizer used in previous models. Right: Fixed length temporal summarizer used in 0.9.0

Depth reprojection in simulator

The driving models are trained in a new simulator using “depth reprojection,” which uses an approximate depth map of the scene to synthesize new views from different positions. We use different depth estimation algorithms in testing to validate that the model is not cheating using depth artifacts.
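Depth reprojection rests on standard pinhole-camera geometry: back-project each pixel using its depth, move the camera, and project again. The sketch below assumes a pinhole model with intrinsics `K` and a rigid pose change `(R, t)`; it is a textbook version, not comma’s simulator, and it only computes where pixels land, leaving out color resampling, occlusion handling, and hole filling. `reproject` is a hypothetical name.

```python
import numpy as np


def reproject(depth, K, R, t):
    """Map every pixel of a depth image into a camera displaced by (R, t).

    depth: (H, W) depth along the optical axis, in meters.
    K: (3, 3) pinhole intrinsics.
    R, t: rotation (3, 3) and translation (3,) taking points from the original
          camera frame into the new camera frame.
    Returns (H, W, 2) pixel coordinates (u, v) in the new view.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).T.astype(float)
    points = np.linalg.inv(K) @ pix * depth.reshape(1, -1)  # back-project to 3D
    moved = R @ points + t.reshape(3, 1)                    # express in the new camera frame
    proj = K @ moved
    return (proj[:2] / proj[2:3]).T.reshape(h, w, 2)        # perspective divide


K = np.array([[100.0, 0.0, 2.0], [0.0, 100.0, 1.5], [0.0, 0.0, 1.0]])
depth = np.full((3, 4), 10.0)
# Moving the camera 1 m to +x shifts points 1 m to -x in its frame.
shifted = reproject(depth, K, np.eye(3), np.array([-1.0, 0.0, 0.0]))
print(shifted[..., 0])  # every u shifted left by f * 1 m / 10 m = 10 pixels
```

Nearer pixels shift more than distant ones, which is exactly the parallax that makes the synthesized views depend on the depth map, and why depth artifacts could leak information the model might exploit.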

Simulating lateral and longitudinal movement in the simulator

Lateral and longitudinal training

Last but not least, the model’s training now includes both lateral and longitudinal planning. This enables the model to learn longitudinal maneuvers such as slowing down for turns and exits, stopping at traffic lights and stop signs, etc. Because we have built our training stack to be end-to-end, turning the longitudinal training flag on “just worked”! The models are never explicitly told about traffic lights or stop signs, and yet they have learned to obey them. That is the magic of end-to-end!

Driver Monitoring: steps toward end-to-end

To capture all the subtleties of driver behaviors, distracted or not, the driver monitoring (DM) model eventually needs to have an understanding of the whole scene instead of specific attributes of the driver. We started using the full frame of the comma three interior camera for DM (“full-frame DM”) not long ago, and that extra context enables us to try making holistic predictions of the driver’s state.

At comma, we try to avoid hand-labeled ground-truth, as it makes increasing the size of the dataset very expensive. So what can be used as ground-truth for driver attention that can be easily extracted from our existing openpilot logs? Let us first take a step back and revisit the goal of DM, which is to answer the question “Is the driver ready to take over?” With that in mind, a natural approach is to ask the DM model to predict whether there will be any driver interactions (disengage, override, etc.) in the next x seconds, since all interactions with the car are logged. We can train a model to predict this probability of readiness. Additionally, we want the DM model to know when the driver is blatantly not ready to take over, i.e. distracted. It is safe to assume that drivers pay much more attention to the road when the car is moving than while it is parked. Hence, from this distribution shift between moving and parked frames, the DM model also learns to predict the probability of unreadiness.
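The readiness target can be derived from logs alone. A minimal sketch, assuming frames and interactions are given as timestamps in seconds; `horizon_s` stands in for the unspecified x seconds, and treating (nearly) stationary frames as “not ready” examples is our hypothetical reading of the moving-versus-parked distribution shift, not a documented labeling rule. Both function names are illustrative.

```python
from bisect import bisect_right


def readiness_labels(frame_times, interaction_times, horizon_s):
    """1 if a logged driver interaction happens within (t, t + horizon_s], else 0."""
    interactions = sorted(interaction_times)
    labels = []
    for t in frame_times:
        i = bisect_right(interactions, t)  # index of the first interaction strictly after t
        upcoming = i < len(interactions) and interactions[i] <= t + horizon_s
        labels.append(int(upcoming))
    return labels


def unreadiness_labels(speeds_mps, parked_below_mps=0.1):
    """Hypothetical proxy: frames logged while (nearly) stationary count as 'not ready'.

    A real pipeline would need to tell parking apart from stopping at a light.
    """
    return [int(v < parked_below_mps) for v in speeds_mps]


# Frames at 0 s, 5 s and 10 s; one disengagement logged at 7 s; 3 s horizon.
print(readiness_labels([0.0, 5.0, 10.0], [7.0], horizon_s=3.0))  # [0, 1, 0]
```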

Subtle distracted cases that hardly trigger with classical policy

These end-to-end DM predictions proved to be extremely good at catching distractions, even subtle ones that easily slipped through the classical DM policy. But false positives also show up in some cases that don’t look like normal driving but are common in the openpilot fleet, for example, when the driver is sitting in a very chill position with their head tilted. This is an understandable mistake: this posture typically occurs during open highway driving, which usually involves no driver interactions for long stretches.

e2e thinks chill driver is distracted although he is looking at the road

To correct for this, we manually labeled a handful of images that the end-to-end model suspected to be distracted, and then trained a helper model to filter out the chill drivers from the real distractions. Finally, the end-to-end DM ground-truths were regenerated using this helper model. This approach allowed us to leverage a large dataset while hand-labeling only a small fraction of it.
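The regeneration step amounts to filtering the end-to-end labels through the helper model. A minimal sketch, where `regenerate_labels`, `e2e_distracted`, and `helper_is_chill` are hypothetical names standing in for the pipeline, the end-to-end ground-truth, and the helper classifier:

```python
def regenerate_labels(frames, e2e_distracted, helper_is_chill):
    """Keep an end-to-end 'distracted' label only if the helper model doesn't see a chill driver.

    frames: iterable of frame identifiers.
    e2e_distracted: callable frame -> bool, the original end-to-end ground-truth.
    helper_is_chill: callable frame -> bool, a classifier trained on a small hand-labeled set.
    """
    # `and` short-circuits, so the helper only runs on frames flagged as distracted.
    return {f: e2e_distracted(f) and not helper_is_chill(f) for f in frames}


e2e = {"a": True, "b": True, "c": False}
chill = {"a": True, "b": False, "c": False}
print(regenerate_labels(e2e, e2e.get, chill.get))  # {'a': False, 'b': True, 'c': False}
```

The helper only ever needs to answer a narrow question on already-flagged frames, which is why a handful of hand labels can be enough to clean a large dataset.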

With the end-to-end policy, the DM model shipped in this release detects 15% more distractions, with no extra false positives.

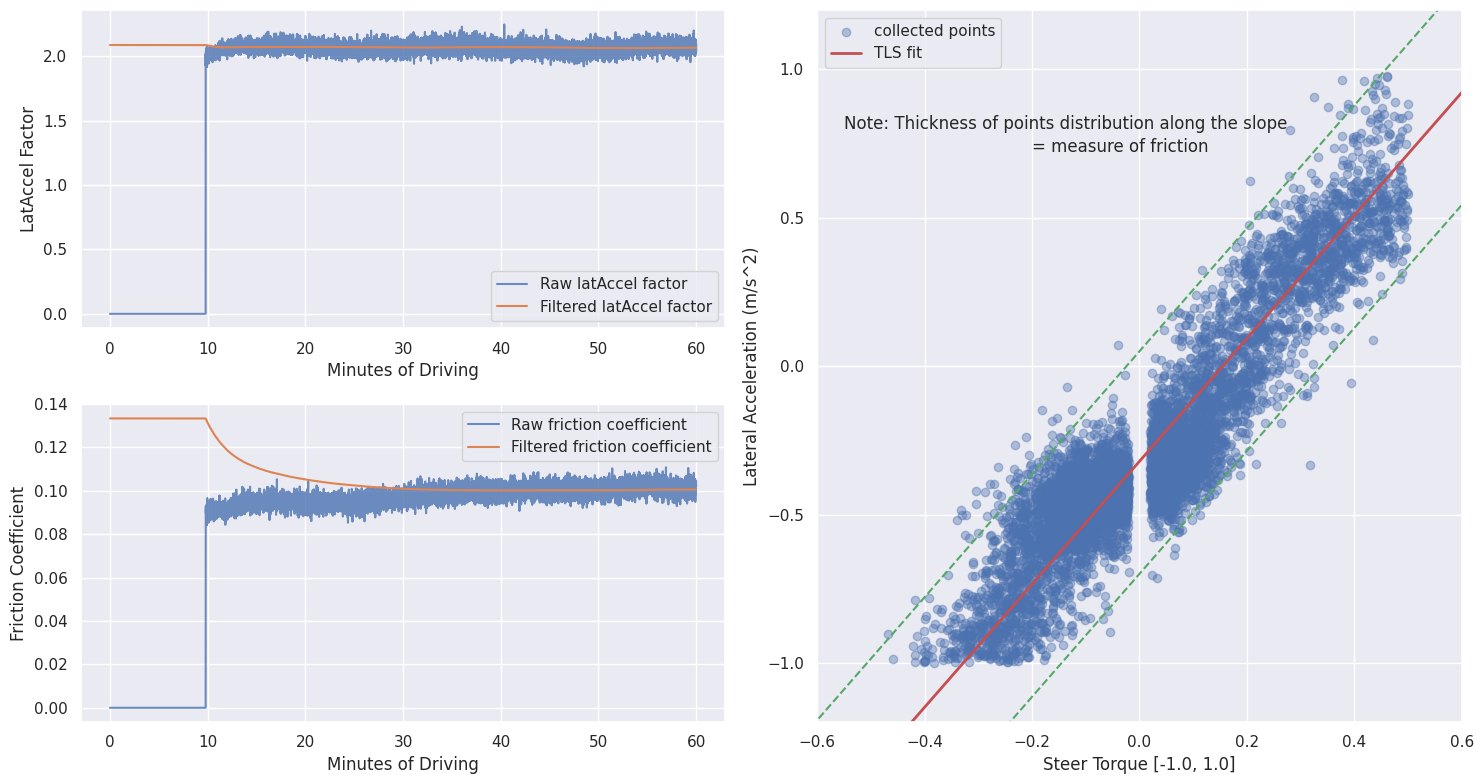

torqued: an auto-tuner for lateral control

In openpilot 0.8.15, we introduced a new controller based on the torque applied to the steering wheel. This allowed us to eliminate the need for finely tuned PID gains for many of our platforms. The key insight that made this possible was that the lateral acceleration of a vehicle has a near-linear relationship with the torque on the tire (after adjusting for the road bank), and that the tire torque is linearly related to the steering torque command we send to the car. In its initial release, we set the linear factors and friction coefficients from platform aggregates and enabled Torque Control on ~34% of our fleet.
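The linear relationship reduces the controller’s core to a division plus a friction term. A minimal sketch, assuming lateral acceleration in m/s² and a normalized torque command clipped to [-1, 1]. `lat_accel_factor` and `friction` play the roles of LatAccelFactor and FrictionCoefficient; the function name, the tanh-shaped friction term, its width, and the roll sign convention are hypothetical and not openpilot’s implementation.

```python
import math

G = 9.81  # m/s^2


def torque_command(desired_lat_accel, roll_rad, lat_accel_factor, friction,
                   lat_accel_error=0.0, friction_width=0.3):
    """Normalized steer torque in [-1, 1] for a desired lateral acceleration (hypothetical sketch).

    Assumes lateral acceleration ~= lat_accel_factor * torque once gravity's component
    on a banked road is removed, plus a friction term in the direction of the error.
    """
    # Part of the lateral acceleration is supplied by gravity on a banked road
    # (the sign convention for roll_rad is an assumption).
    bank_compensated = desired_lat_accel - G * math.sin(roll_rad)
    feedforward = bank_compensated / lat_accel_factor
    # tanh acts as a smooth sign, so the friction term doesn't chatter around zero error.
    friction_term = friction * math.tanh(lat_accel_error / friction_width)
    return max(-1.0, min(1.0, feedforward + friction_term))


print(torque_command(1.0, roll_rad=0.0, lat_accel_factor=2.0, friction=0.1))  # 0.5
```

Once the two coefficients are known, no PID gains are needed for the feedforward path, which is what makes learning them per car worthwhile.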

In this release, we extended the coverage to ~60% of the fleet, covering almost all Toyota, Lexus, Hyundai, Kia, and Genesis models. We also realized that LatAccelFactor and FrictionCoefficient depend on a host of attributes other than the car platform, such as tire wear, tire pressure, road quality, the steering column, etc. The offline platform-average values were not optimal for every car on a platform, so we created a new daemon called torqued that learns these values live for each individual car. It estimates a total-least-squares fit over an approximately i.i.d. collection of steer-torque and lateral-acceleration points (after adjusting for lag in the system). All learned values are saved at the end of each route and used in the next one.
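The core of such a fit can be sketched in NumPy. Total least squares (orthogonal regression) treats noise in torque and lateral acceleration symmetrically, unlike ordinary least squares, which assumes the x values are exact. This sketch fits only a slope and offset on synthetic data; it doesn’t reproduce how torqued collects approximately i.i.d. points, compensates for lag, or estimates friction, and `total_least_squares_line` is a hypothetical name.

```python
import numpy as np


def total_least_squares_line(x, y):
    """Fit y = slope * x + offset by minimizing perpendicular (not vertical) distances."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    centered = np.column_stack([x - x.mean(), y - y.mean()])
    # The top right-singular vector of the centered cloud is the best-fit line direction.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    dx, dy = vt[0]
    slope = dy / dx
    return slope, y.mean() - slope * x.mean()


# Synthetic data: lat_accel = 2.5 * torque + 0.1, with noise on both axes.
rng = np.random.default_rng(0)
torque = rng.uniform(-1.0, 1.0, 2000)
lat_accel = 2.5 * torque + 0.1
slope, offset = total_least_squares_line(torque + rng.normal(0.0, 0.02, 2000),
                                         lat_accel + rng.normal(0.0, 0.05, 2000))
print(f"LatAccelFactor ~ {slope:.3f}, offset ~ {offset:.3f}")
```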

LiveTorqueParameters learner in action

This is a vital step in our dream of having an auto-tuner that removes the need for any subjective hand-tuning of values across the openpilot stack. In future releases, we plan to extend Torque Control to all cars with a torque interface, including ones with a non-linear steering torque command to tire torque relationship (looking at you, GM!).

comma connect

openpilot UI showing bookmark button on bottom left

The openpilot UI now has a bookmark button at the bottom left of the sidebar. Tap it to create a bookmark, which will show up in yellow in the connect timeline. (#25517, #25848, #237)

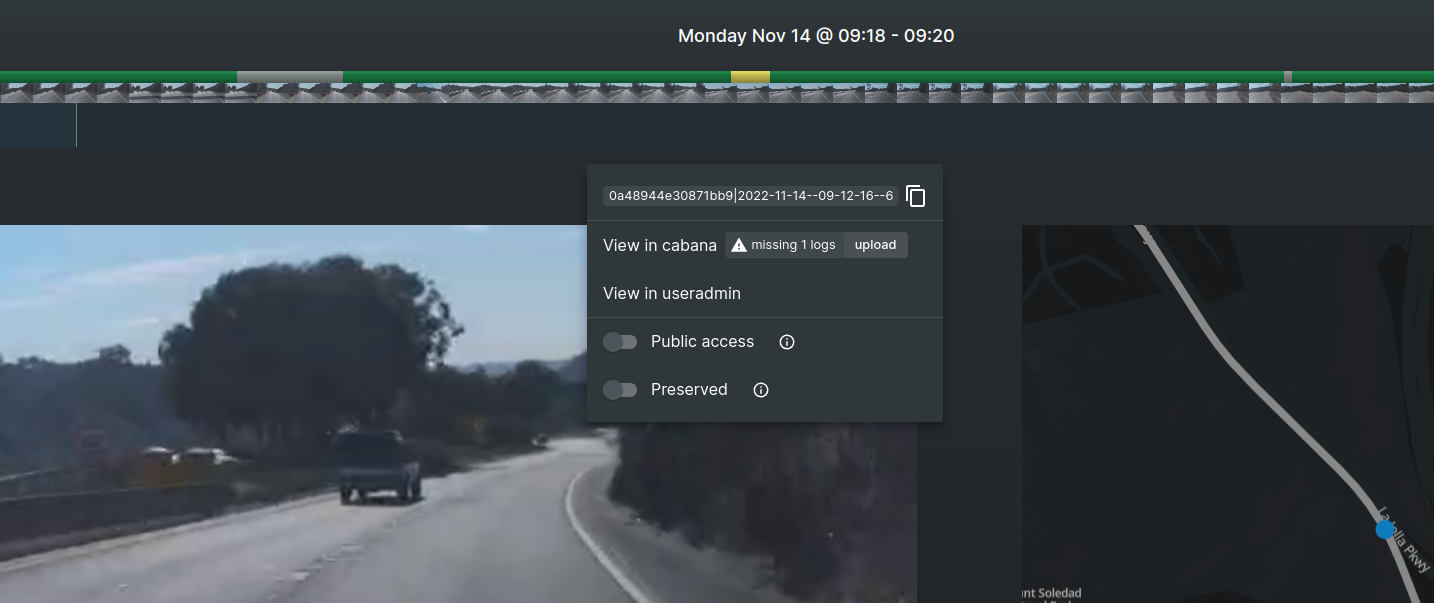

connect now also has easy shortcuts to preserve and share routes (#253, #269):

- You can preserve up to 10 routes so that the data doesn’t get deleted. With comma prime you can save 100!

- Make your route public to share your openpilot drive with anyone using the link.

connect showing preserve and make public shortcuts. Grey section in the timeline shows a user assisting openpilot, and yellow shows a bookmark

A useful tweak for users with many devices: the device list is now finally sorted deterministically. Devices the user owns show up first, with shared devices below, sorted by name and dongle ID (#319).
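The ordering can be expressed as a single tuple sort key. A minimal sketch with a hypothetical `Device` record and `sort_devices` function (not connect’s actual code); whether name comparison is case-insensitive isn’t stated, so this compares names as plain strings.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    dongle_id: str
    owned: bool  # False for devices shared with the user


def sort_devices(devices):
    """Owned devices first, then shared ones; each group by name, then dongle ID."""
    # False sorts before True, so `not d.owned` puts owned devices first.
    return sorted(devices, key=lambda d: (not d.owned, d.name, d.dongle_id))


devices = [Device("b", "2", False), Device("a", "9", True),
           Device("a", "1", True), Device("a", "0", False)]
print([d.dongle_id for d in sort_devices(devices)])  # ['1', '9', '0', '2']
```

Falling back to the dongle ID makes the order fully deterministic even when two devices share a name.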

navigation

We’ve also made some improvements to navigation in connect:

- Tapping on your car on the map will tell you its location and the last time an update was received from the device. (#251)

- Easily get directions back to your car with the new “open in maps” button

- You can use the “save as” button to favorite the location, making it easy to navigate to in the future.

- Search now shows the distance to each result to make it easier to find a location close to you. (#242)

clips

First announced in the 0.8.15 blog post, clips are an easy way to export and share video clips of your drives with openpilot. The ability to create clips comes with a comma prime subscription. We’ve made some improvements:

- The share button now automatically makes the clip publicly accessible, while copying the link to your clipboard (#265)

- Added a “view clips” button to the device page to find your clips in fewer steps. (#287)

- The clips page has received a design refresh, while other improvements to the clip creation experience are coming soon. (#320)

UI updates

Experimental mode comes with a new driving visualization. The path reflects whether the model wants to apply the gas or brakes. The wide camera will also fade in at low speeds to show some turns that may be out of view for the narrow camera that’s usually shown.
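A low-speed fade-in like this can be implemented as a linear crossfade on vehicle speed. A minimal sketch, where the function name and the 5 m/s and 15 m/s thresholds are hypothetical, since the actual fade speeds aren’t given:

```python
def wide_camera_alpha(v_ego, fade_start=15.0, fade_end=5.0):
    """Wide-camera opacity: 0 above fade_start m/s, 1 below fade_end m/s, linear in between."""
    if v_ego >= fade_start:
        return 0.0
    if v_ego <= fade_end:
        return 1.0
    return (fade_start - v_ego) / (fade_start - fade_end)


print([wide_camera_alpha(v) for v in (20.0, 10.0, 2.0)])  # [0.0, 0.5, 1.0]
```

A ramp rather than a hard switch avoids the view flickering between cameras when the car hovers around a single threshold speed.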

Experimental mode slowing down for a turn

Aside from that, the border will now turn grey for steering override, and multi-language has been extended to navigation.

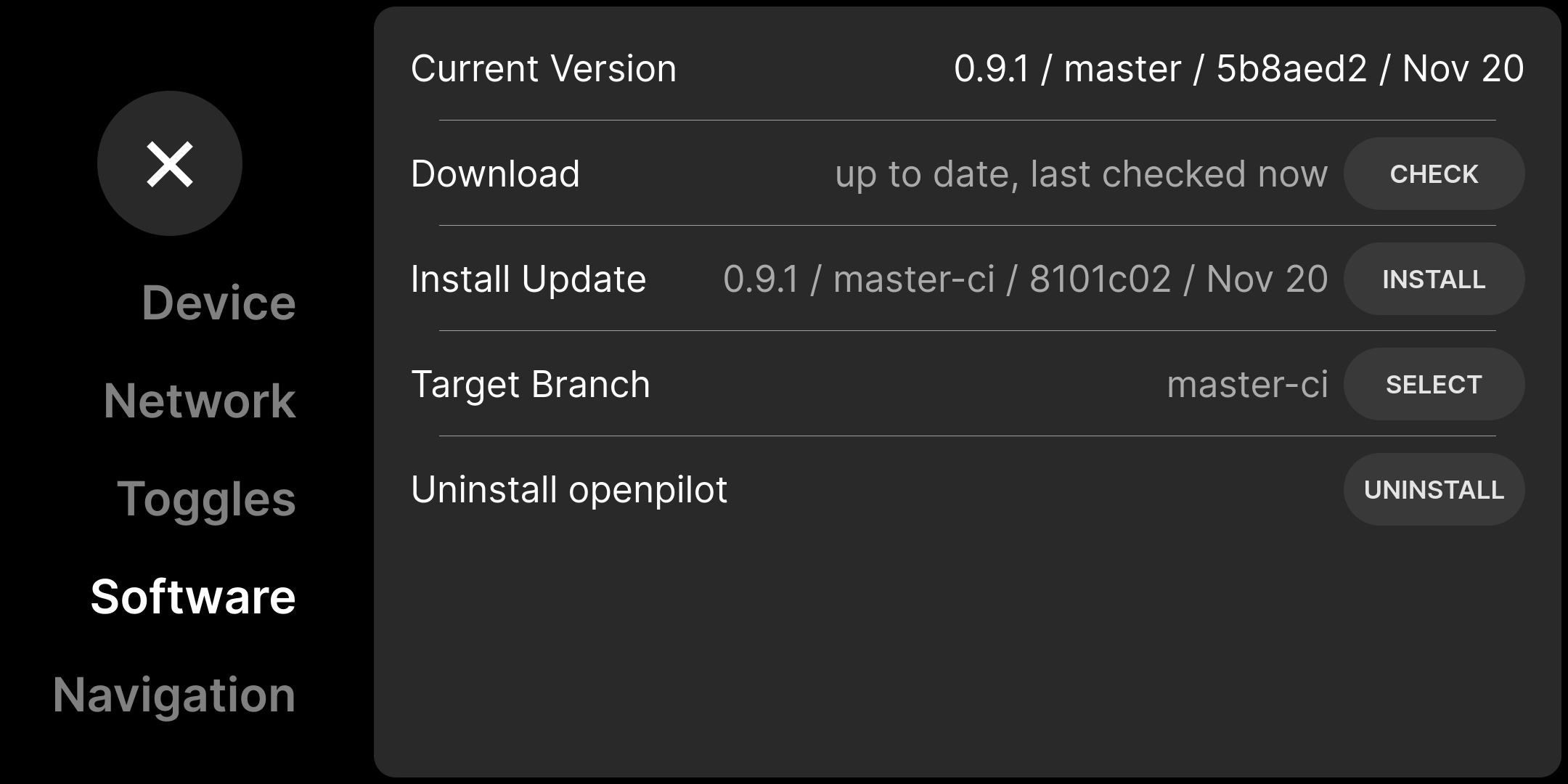

Improved updater experience

Previously, updates appeared almost magically shortly after we shipped a release, in the form of a popup with the release notes. We’ve written a new UI around the updater that makes it easy to check for updates, download them, and switch branches.

New updater UI showing the version, branch, commit, and commit date of both the current version and the downloaded update

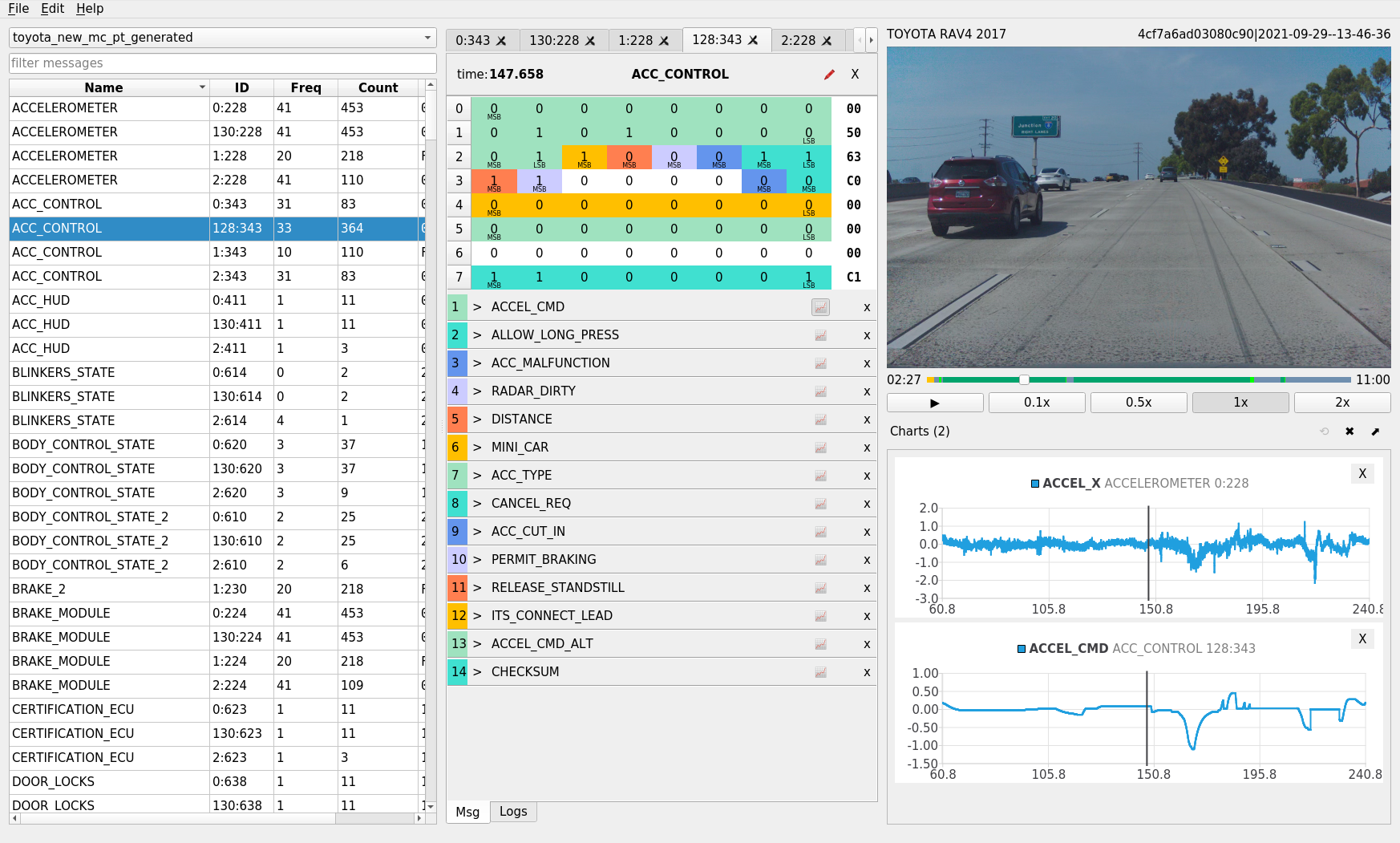

New cabana

Dean Lee, our most prolific contributor, has been hard at work on a rewrite of cabana, our CAN bus analysis and reverse engineering tool. cabana originally launched as the web app companion to the panda, and it has been crucial in growing openpilot car support to over 200 models. However, cabana’s parallel JavaScript implementations of many openpilot components have become harder to maintain, and they perform worse since the introduction of CAN-FD and its larger message sizes.

The new cabana is written in Qt and reuses much of openpilot’s code. Soon, we’ll deprecate the web cabana and fully transition to the new one. Try it out on one of your own routes or with the demo route: tools/cabana/cabana --demo.

Cars

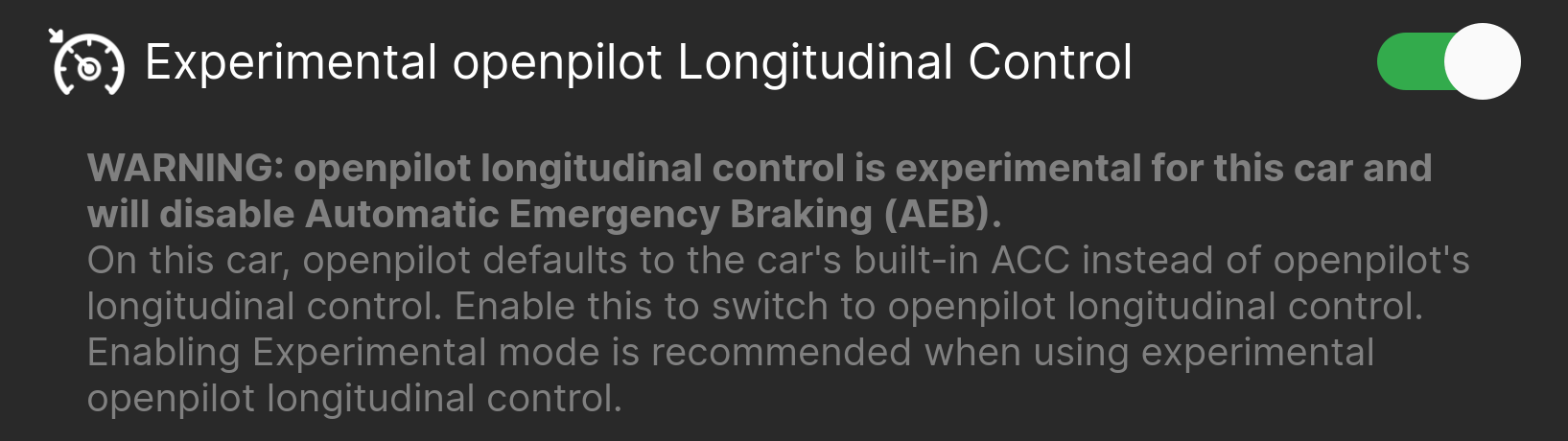

Experimental longitudinal control

You downloaded 0.9 and the experimental mode toggle is greyed out. What gives?

Until this release, openpilot’s ACC offered a similar enough experience to the one built into your car that support for openpilot longitudinal control wasn’t prioritized. Now that we’re shipping end-to-end longitudinal, we’re also introducing a new toggle to enable openpilot longitudinal control on cars that currently use stock ACC. The toggle exists both for cars that lack radar and as a staging area for longitudinal support that’s still under development. Once the end-to-end policy is good enough without a radar fallback, we’ll ship openpilot longitudinal control as the default on these cars.

Find the toggle under Settings -> Toggles

The toggle is not available on the release branch; to get the toggle, run a branch like devel for a release-like experience or master-ci for the most bleeding edge experience. Install with installer.comma.ai/commaai/devel as the software URL in the comma three setup.

For the full list and details of car capabilities, see docs/CARS.md. openpilot longitudinal control is coming to more cars and will be shipped behind the toggle first. Check out these open pull requests: RAM + Chrysler, Subaru, and new Honda Bosch.

Bug Fixes

- Fixed many GM and Chevy faults

- Improved Hyundai firmware query reliability

Enhancements

- Speeds shown by openpilot match the car’s dash

- Torque control for all Hyundai, Kia, and Genesis models

- Torque control for (almost) all Toyota and Lexus models

- Experimental longitudinal support for Volkswagen thanks to jyoung8607!

- Experimental longitudinal support for CAN-FD Hyundai, Kia, and Genesis

- Experimental longitudinal support for new GM and Chevy

Car Ports

- Genesis GV70 2022-23 support thanks to zunichky and sunnyhaibin!

- Hyundai Santa Cruz 2021-22 support thanks to sunnyhaibin!

- Kia Sportage 2023 support thanks to sunnyhaibin!

- Kia Sportage Hybrid 2023 support thanks to sunnyhaibin!

Join the team

We’re hiring great engineers to own and work on all parts of the openpilot stack. If anything here interests you, apply for a job or join us on GitHub!
